library(ggplot2)

library(viridis)

library(hrbrthemes)

library(cmdstanr)

library(bayesplot)

library(spatstat)

library(dplyr)

library(tidyr)

source("presence_only_functions.R")

source("surface_functions.R")5 Simulation and modelling

5.1 Implementation

The previous sections discussed the theoretical and practical aspects of data generation. We now proceed to implement the data generation procedure. We first generate observations at each site and create plots of observed intensities, bias, and counts. We then fit a Poisson Point Process to the generated data.

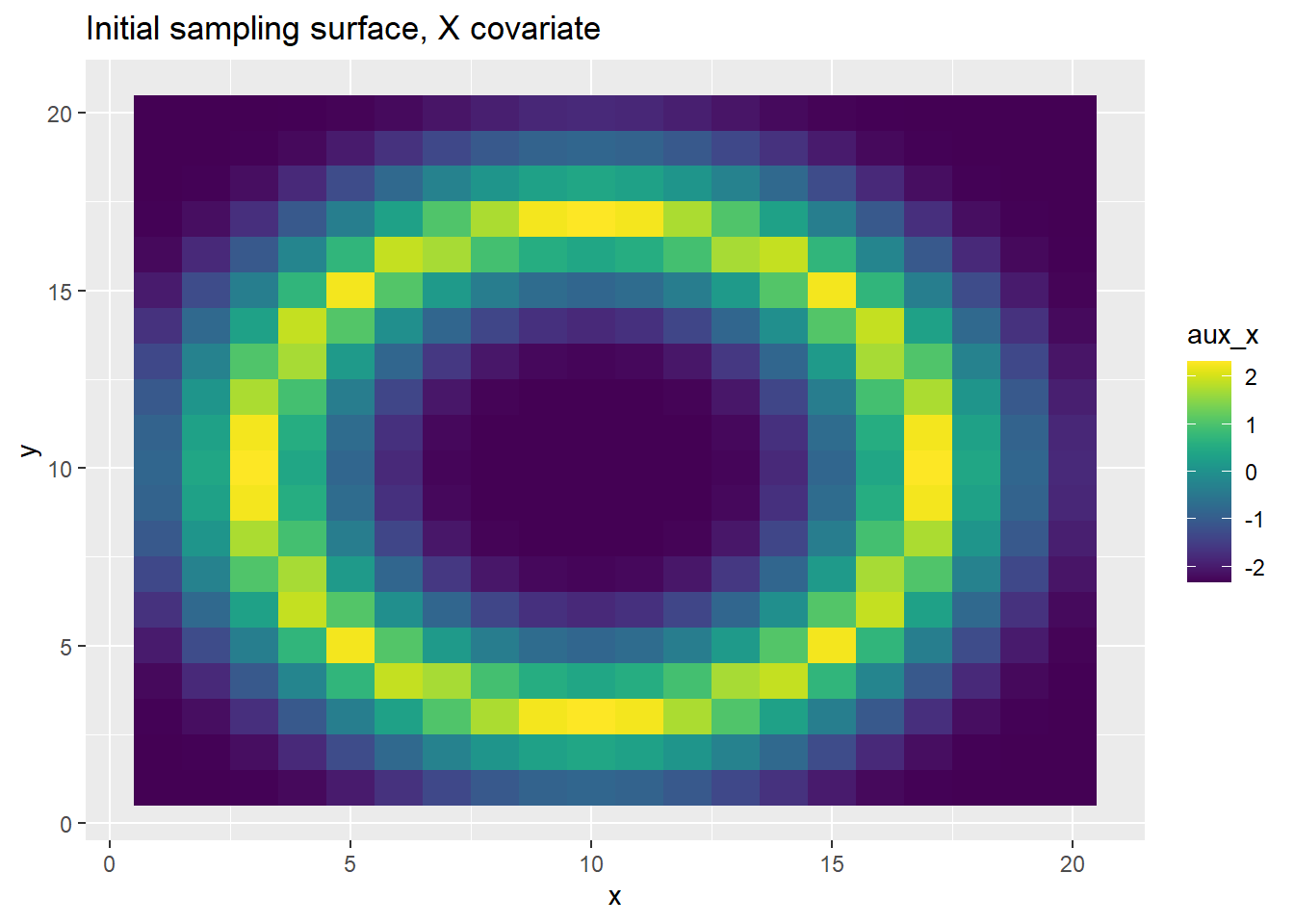

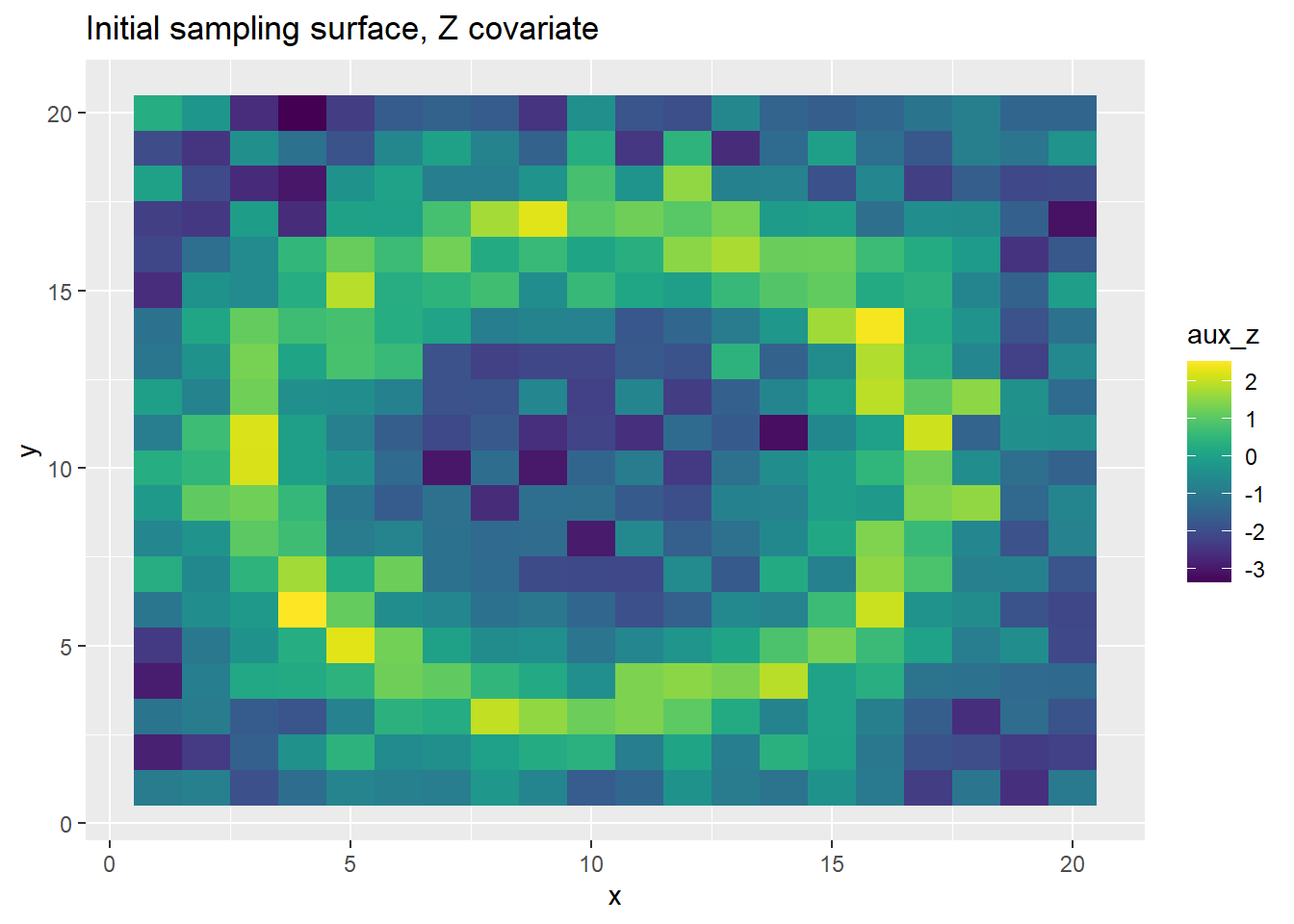

We create a ring-shaped environment based on two correlated covariates and generate PO points accordingly.

area_D <- 100

k <- 20

sites <- k^2

# Parameter values somewhere around Fithian 2015.

alpha <- -2

beta <- 2

gamma <- -2

delta <- 0.5

params <- list(alpha=alpha, beta=beta, gamma=gamma, delta=delta)

aux_cor <- 0.7

grid_points <- get_sampling_surface_donut(k)

grid_points <- get_bias_surface_correlated(grid_points, aux_cor)[1] "Correlation between X and Z"

[,1] [,2]

[1,] 1.0000000 0.8318977

[2,] 0.8318977 1.0000000

sites <- sites/4

grid_points <- grid_points %>% dplyr::filter(x<(k/2)+1, y<(k/2)+1)

# Filter for only a quarter of the grid.

# If we filter, we need to change the total sites as well

# Need distances for LGCP

distance_mat <- as.matrix(dist(grid_points[,1:2]))

data_reps <- 1

corr_matrix <- specify_corr(grid_points[,1:2])

gp_bool <- F

sim_data <- generate_ppp_data_r(grid_points, params, sites, data_reps, corr_matrix, gp_bool, area_D)

Y_positive_indices <- which(sim_data$Y>0)

stopifnot(sum(sim_data$Y[Y_positive_indices]) == nrow(sim_data$coordinates))

sim_data$Y_coords x y

1 8.875951 1.773338

2 8.418654 2.586547

3 7.682086 2.968490

4 9.443523 2.937034

5 9.232656 3.004253

6 9.419722 3.417642

7 9.369643 2.645977

8 10.242691 3.446854

9 10.425511 3.301856

10 9.850484 2.680007

11 5.661172 4.209399

12 6.244311 4.384666

13 6.620891 3.542528

14 6.521558 3.645729

15 9.643957 3.970256

16 4.693362 4.684367

17 5.261157 4.808354

18 7.162766 4.505626

19 4.276170 5.821939

20 3.873761 5.525741

21 4.134683 6.405719

22 1.843085 7.150085

23 2.543938 7.697512

24 2.708596 7.552906

25 2.873088 9.258479

26 2.668776 8.519745

27 3.293108 8.620217

28 2.620333 10.064382

29 3.022244 9.757110

30 3.112645 10.053107grid_points[Y_positive_indices,] x y aux_x aux_z

19 9 2 0.3425419 0.25429287

28 8 3 1.7474542 2.01497588

29 9 3 2.2578390 1.58412306

30 10 3 2.3263479 1.22750804

36 6 4 1.9218166 1.24545874

37 7 4 1.7189159 1.11232836

40 10 4 0.4314067 -0.43573796

45 5 5 2.2578390 2.30065458

47 7 5 0.1949836 0.02354021

54 4 6 1.9218166 2.54440191

62 2 7 -0.2854436 -0.59967665

73 3 8 1.7474542 1.04834902

83 3 9 2.2578390 1.26880450

93 3 10 2.3263479 2.20702428The above output shows the exact location of the generated points and the associated \(x\) and \(z\) covariate values.

# Use the final generation iteration

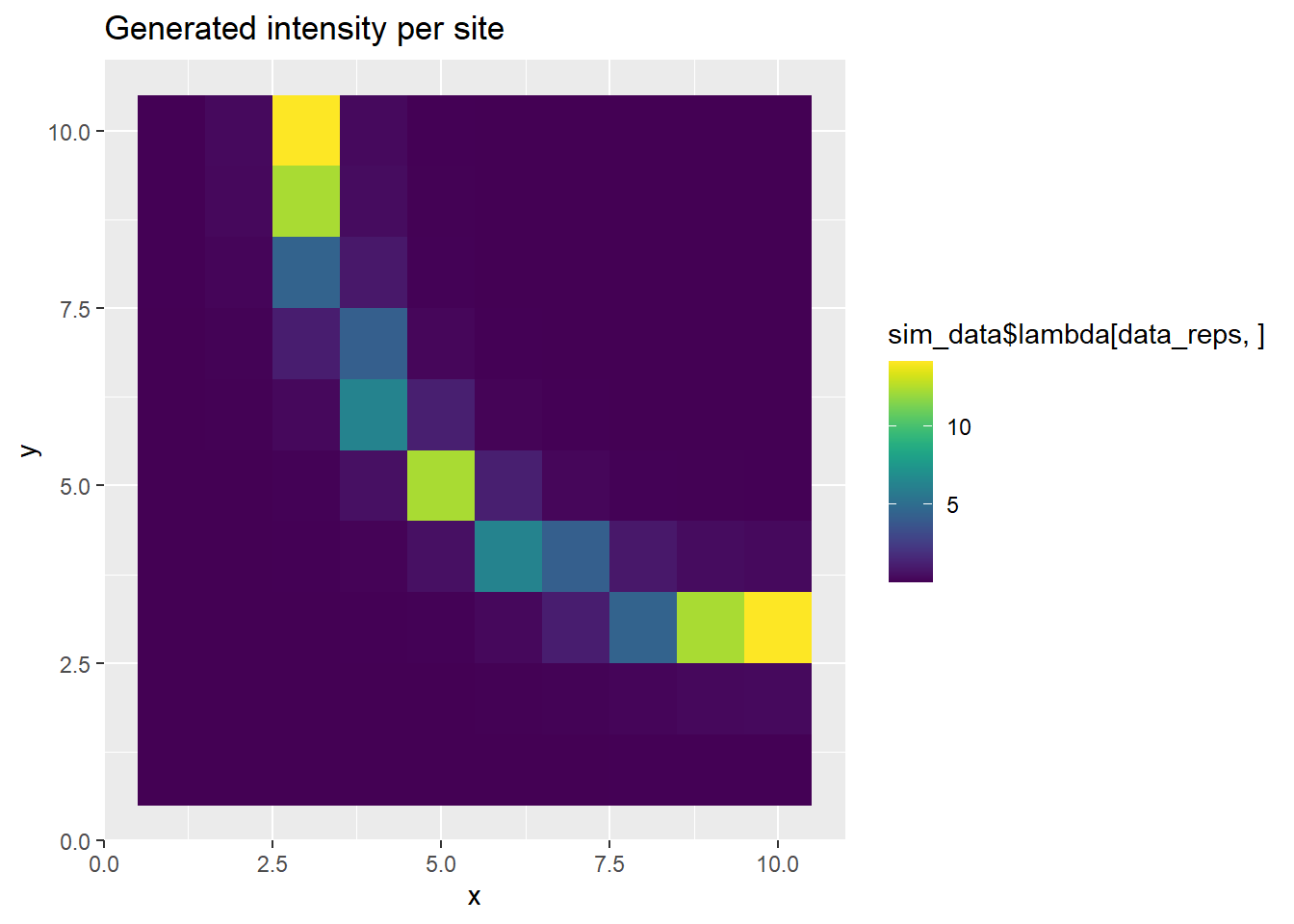

p <- ggplot(grid_points, aes(x, y, fill=sim_data$lambda[data_reps,])) +

geom_tile() +

scale_fill_viridis(discrete=FALSE) +

ggtitle("Generated intensity per site")

print(p)

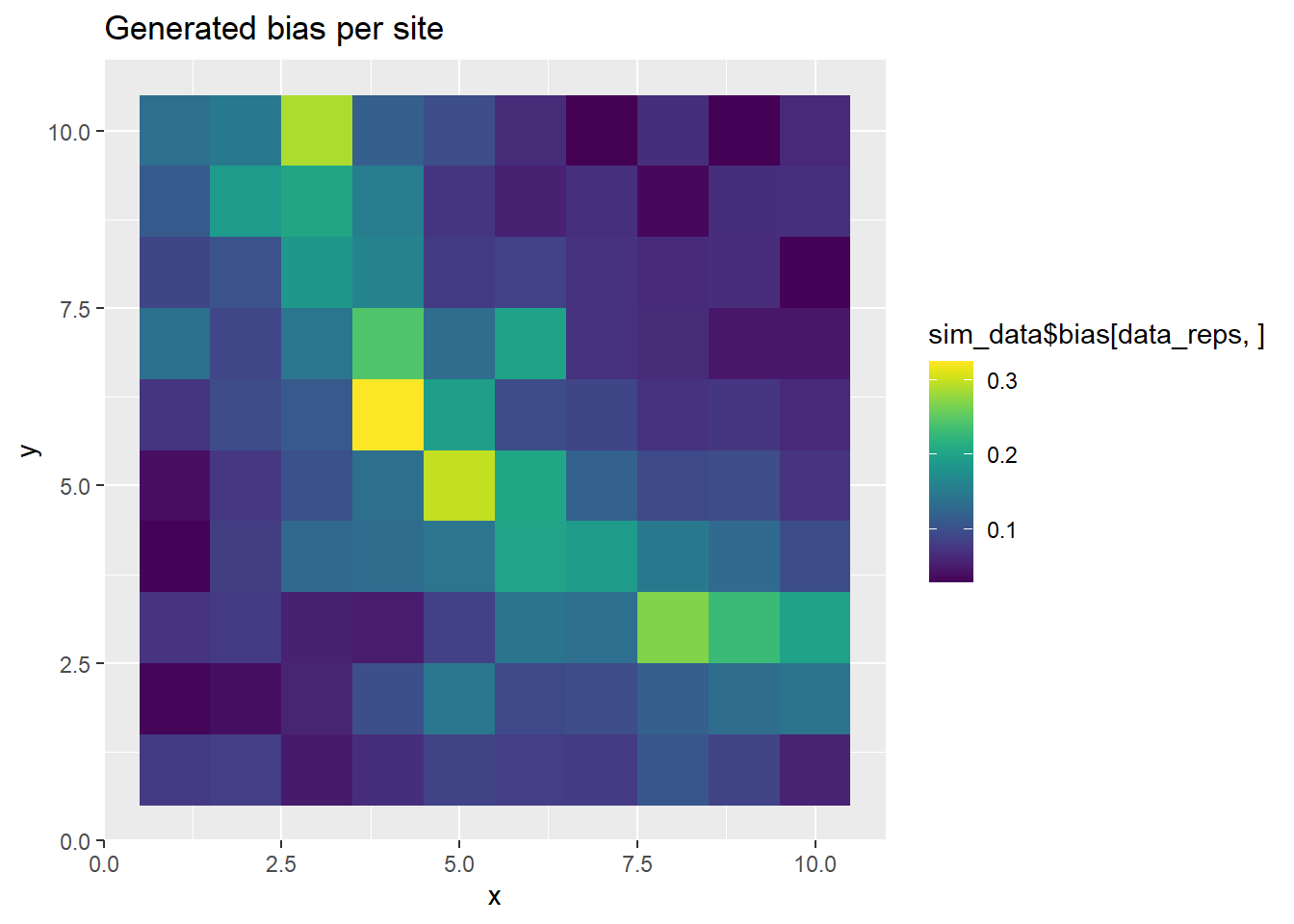

p <- ggplot(grid_points, aes(x, y, fill=sim_data$bias[data_reps,])) +

geom_tile() +

scale_fill_viridis(discrete=FALSE) +

ggtitle("Generated bias per site")

print(p)

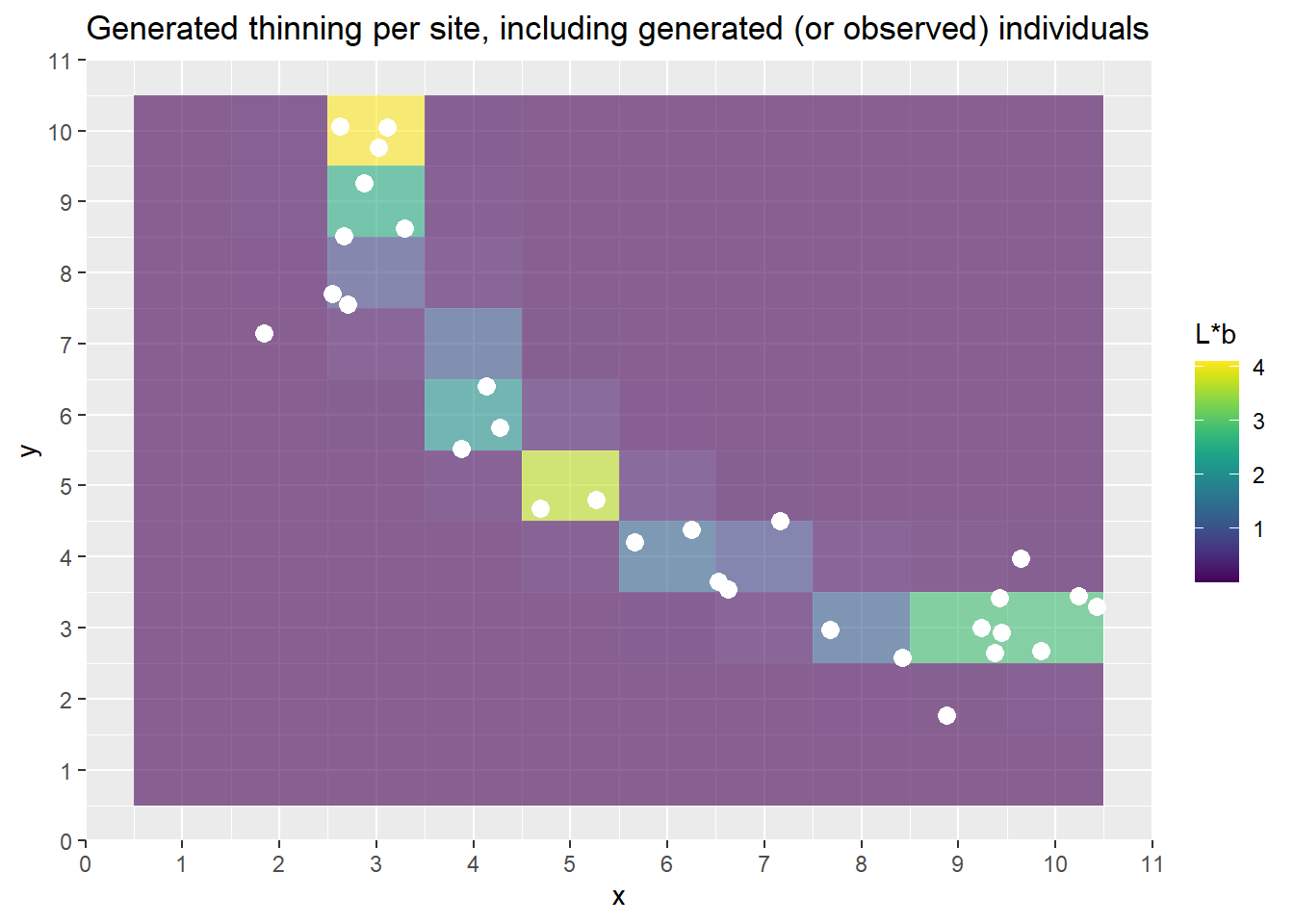

thinned_intensity <- sim_data$lambda[data_reps,]*sim_data$bias[data_reps,]

p <- ggplot() +

geom_tile(grid_points, mapping=aes(x, y, fill=thinned_intensity, width=1, height=1), alpha=.6) +

scale_fill_viridis(discrete=FALSE, name="L*b") +

ggtitle("Generated thinning per site, including generated (or observed) individuals") +

geom_point(data=sim_data$Y_coords, mapping=aes(x=x, y=y), size=3, col="white") +

theme(panel.grid.minor = element_line(colour="white")) +

scale_y_continuous(breaks = seq(0, 20, 1)) +

scale_x_continuous(breaks = seq(0, 20, 1))

print(p)

The above plots show the PO points and the ring-shaped surface.

Next we fit the Thinned Nonhomogeneous Poisson Process model in Stan. We can make a few observations about this model. First, examine the likelihood: \[L(\lambda|Y) = \prod^{N}_{i=1}\frac{\lambda_i^{n_i}\exp[-\lambda_i]}{n_i!}\]. This is made using the assumption that the counts at sites \(s_i\) are distributed following a poisson process with mean \(\lambda\). This assumption allows for a simpler specification of the likelihood, since we can use the poisson distribution. The setting of \(|A|=1\) allows us to make this assumption, as we do not need to multiple the intensity parameter \(\lambda\) by the area in the model.

The next sections of code fitting and diagnosing the poisson process model in Stan, using the generated data.

model_string <- stan_poisson_process

write(model_string, model_path)

# data_reps is 1 here so just use the first index

data = list(N = sites, X = grid_points$aux_x, Z = grid_points$aux_z, y = sim_data$Y[data_reps,])

model <- cmdstan_model(model_path)

fit <- model$sample(data=data, seed=13, chains=3, iter_sampling=2000, iter_warmup=200)The above code fits the table. We next examine the fit.

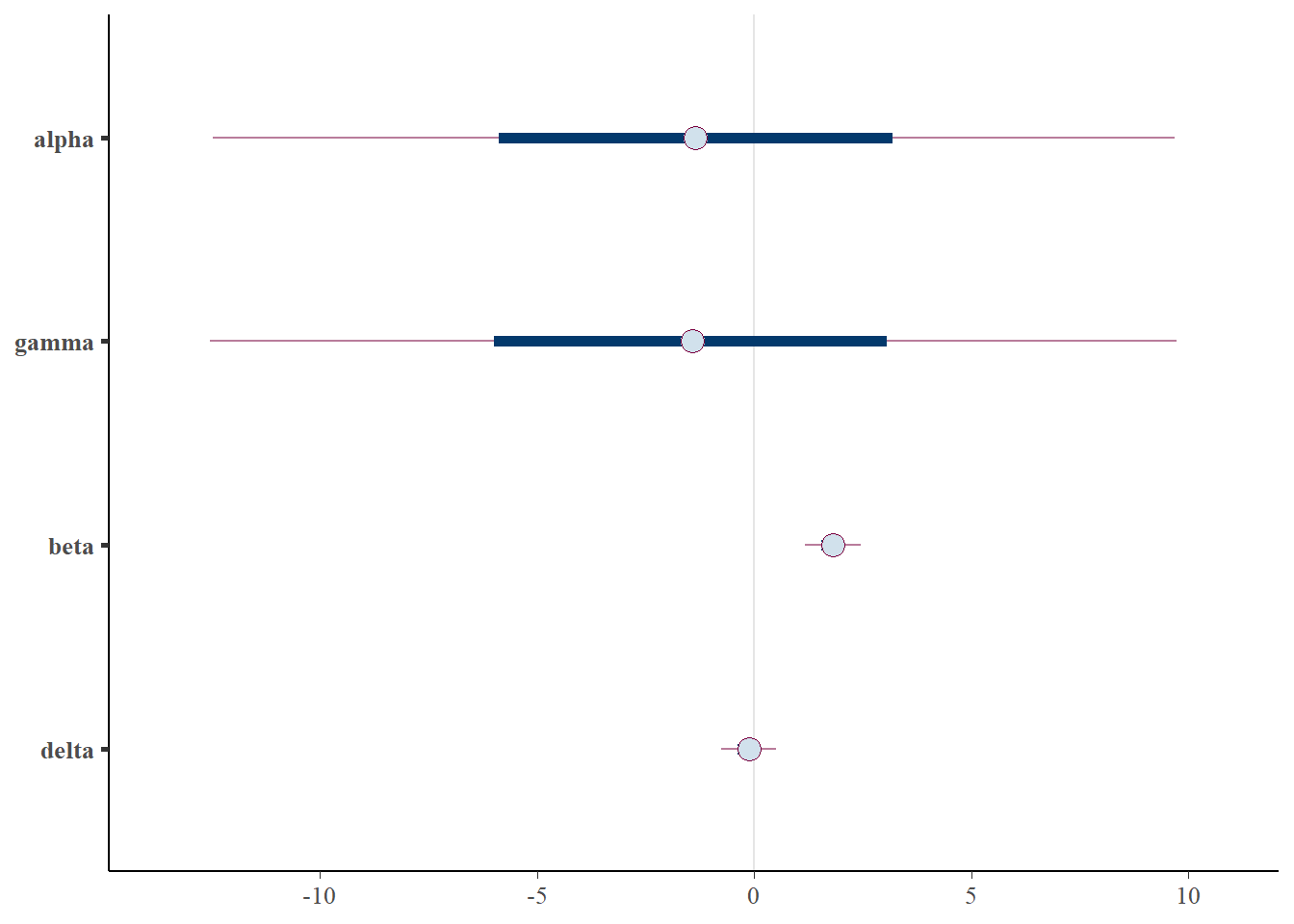

fit$summary(variables=c('alpha', 'beta', 'gamma', 'delta'))# A tibble: 4 × 10

variable mean median sd mad q5 q95 rhat ess_bulk ess_tail

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 alpha -1.36 -1.37 6.74 6.72 -12.5 9.67 1.00 1995. 2148.

2 beta 1.81 1.81 0.386 0.372 1.18 2.45 1.00 2314. 2537.

3 gamma -1.42 -1.43 6.73 6.72 -12.5 9.73 1.00 2019. 2187.

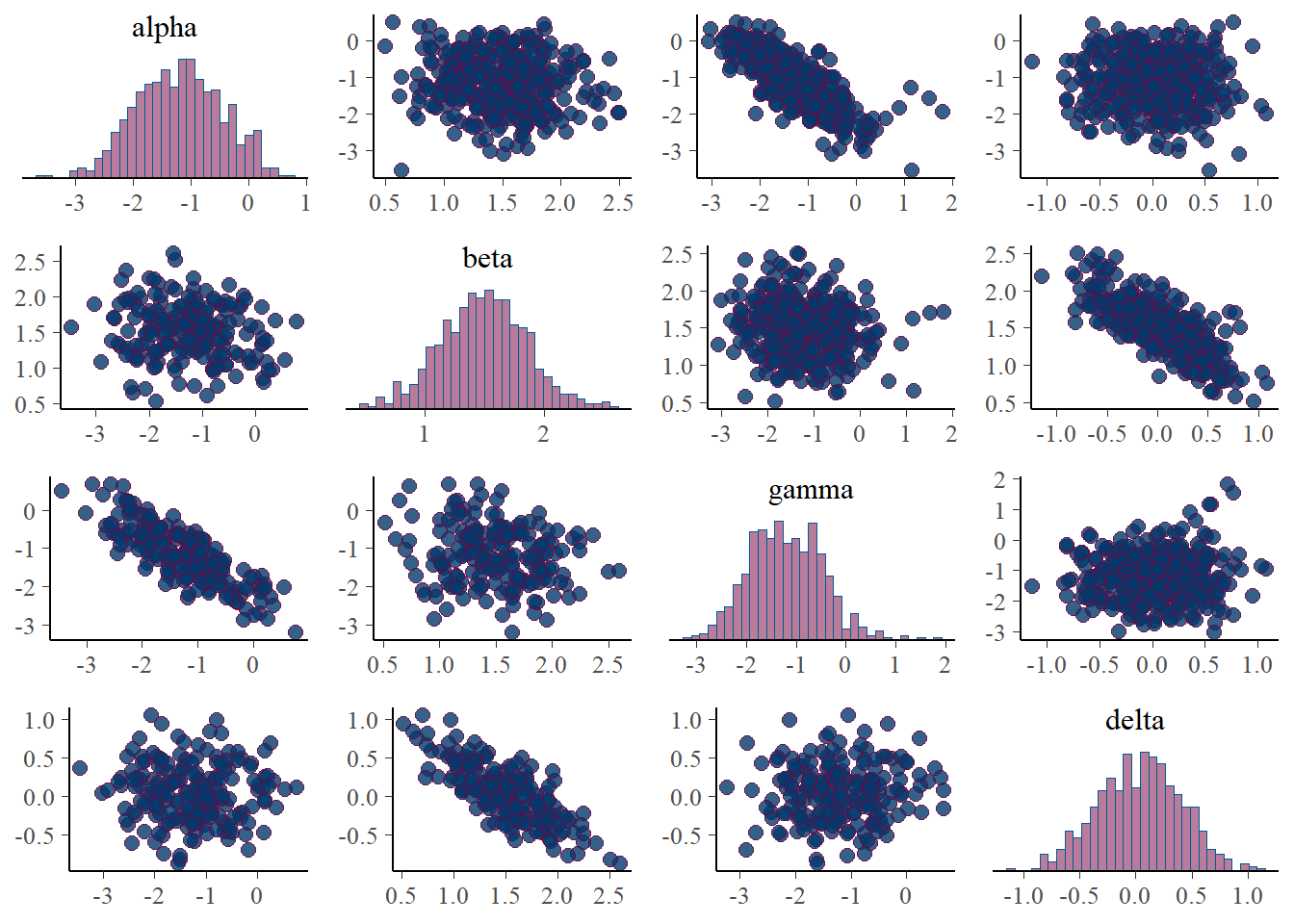

4 delta -0.126 -0.119 0.377 0.382 -0.761 0.496 1.00 2401. 2583.From the table above, we can observe that the parameters \(\beta\) and \(\delta\) have been recovered. However, due to the correlation of \(\alpha\) and \(\gamma\), only the sum \(\alpha+\gamma\) can be correctly identified. Our “true” sum of was equal to -4, and we can see the estimate here is \(~4.7\), which is reasonable given we incorporate priors and are estimating correlated parameters. The correlation between the two can be observed in the scatter plots below, as well as in the wide confidence interval of the posterior, relative to the other parameters.

5.2 Diagnostics

Here we check goodness of fit and Posterior Predictive Checks.

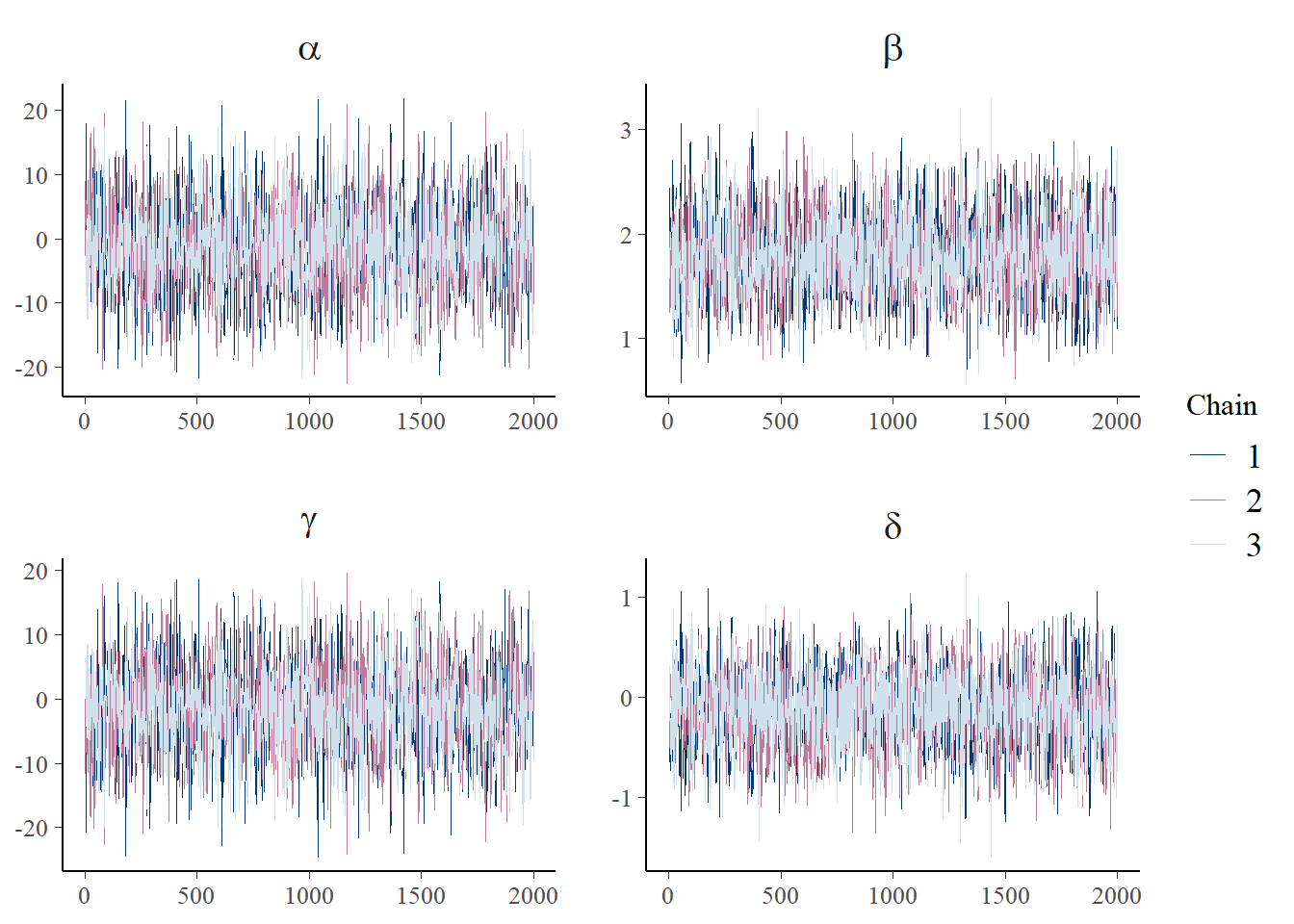

param_vec <- c('alpha', 'gamma', 'beta', 'delta')

posterior <- fit$draws(variables=param_vec)

color_scheme_set("mix-blue-pink")

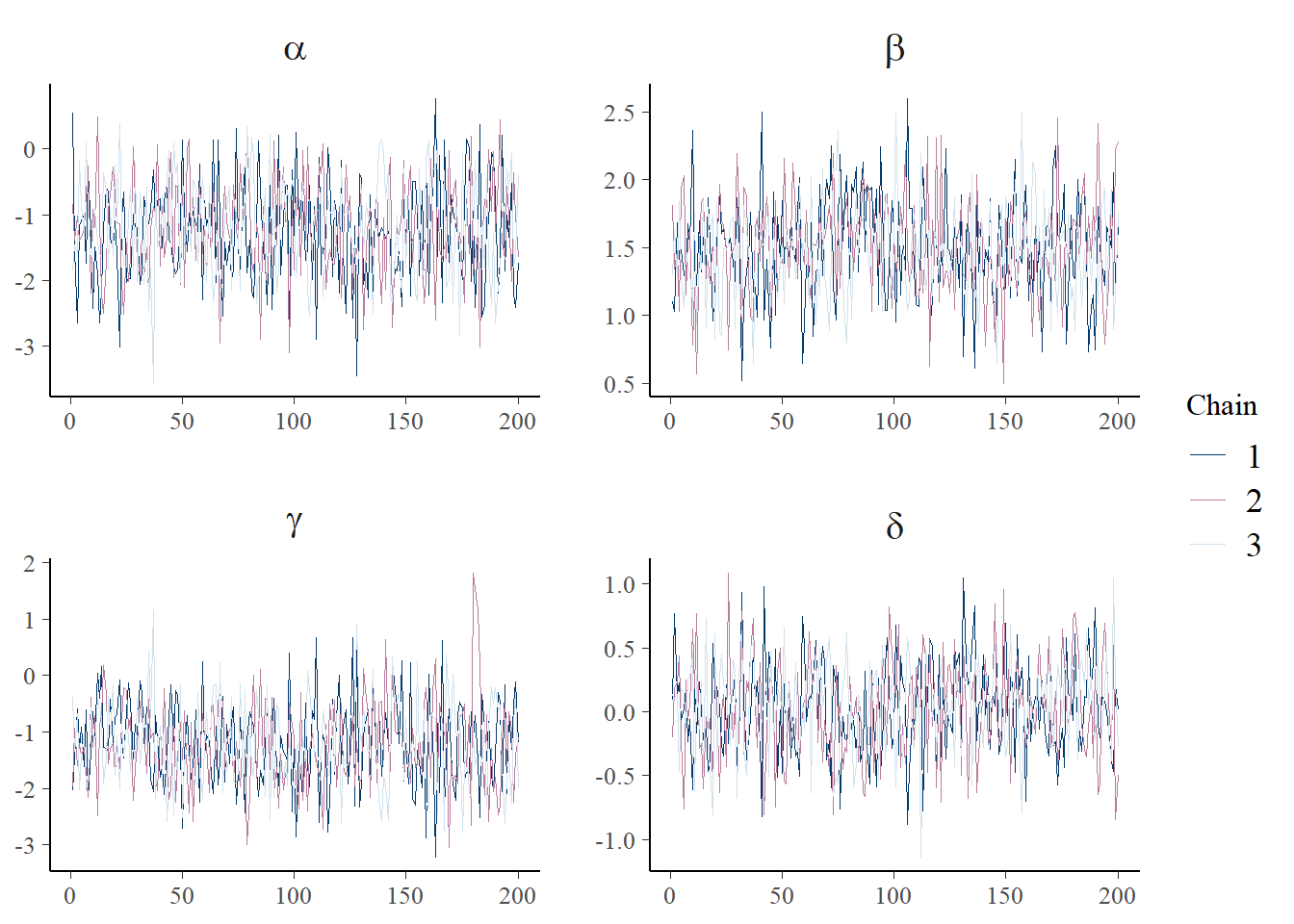

p_trace <- mcmc_trace(posterior, pars = c("alpha", "beta", "gamma", "delta"),

facet_args = list(nrow = 2, labeller = label_parsed))

print(p_trace + facet_text(size = 15))

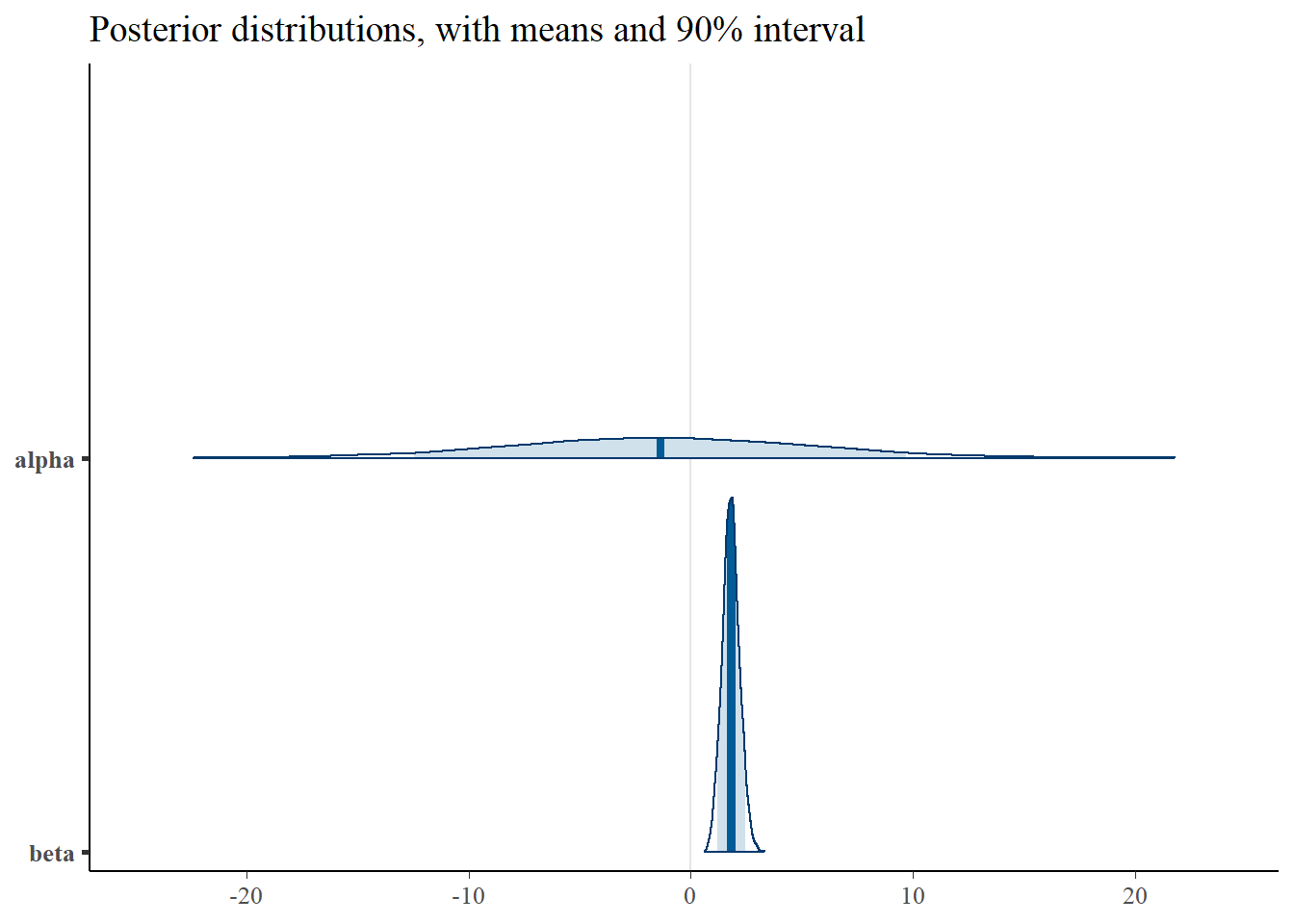

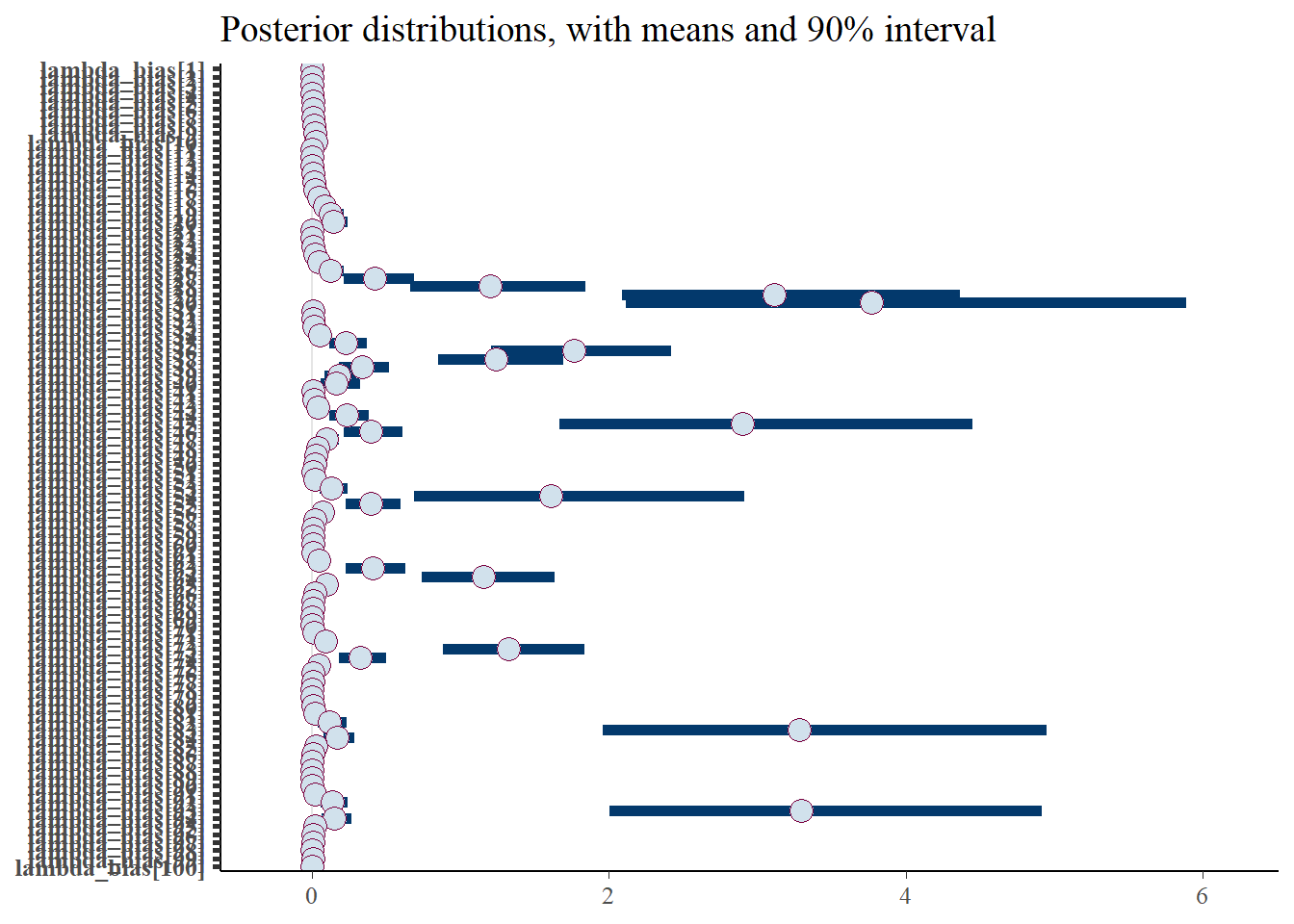

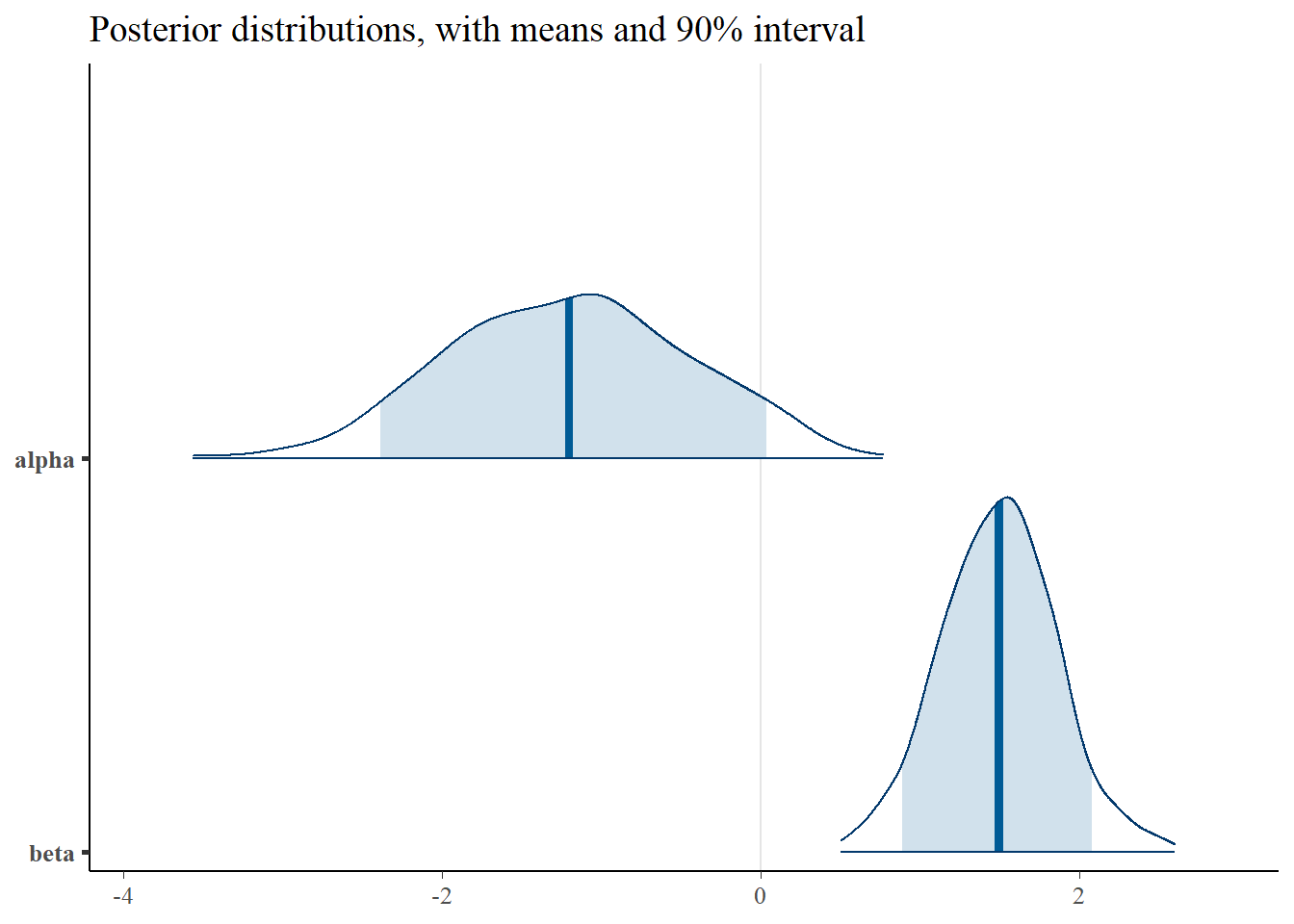

plot_title <- ggtitle(paste("Posterior distributions, with means and 90% interval"))

p_post <- mcmc_areas(posterior, prob = 0.9, point_est="mean", regex_pars = c("alpha", "beta")) + plot_title

print(p_post)

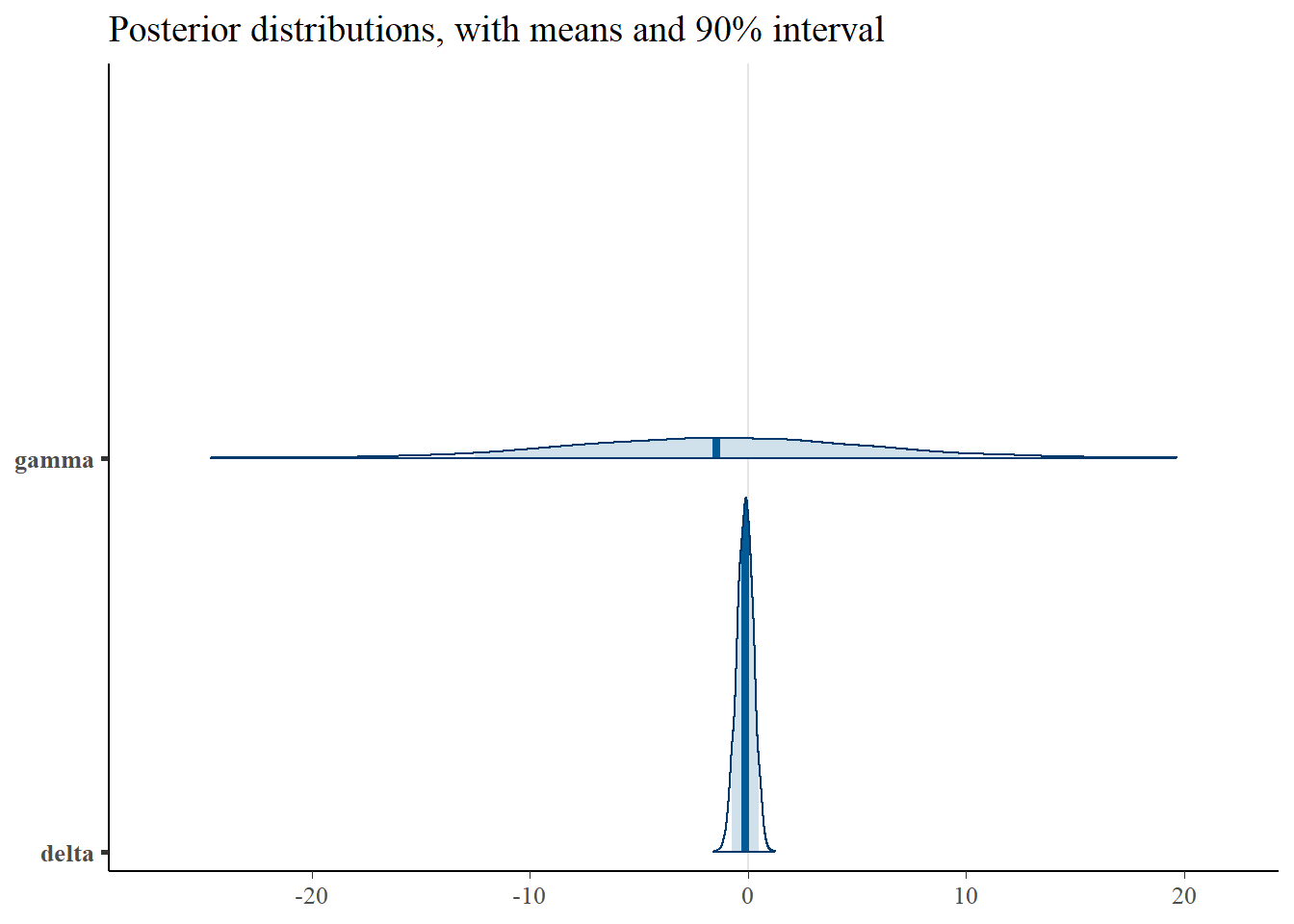

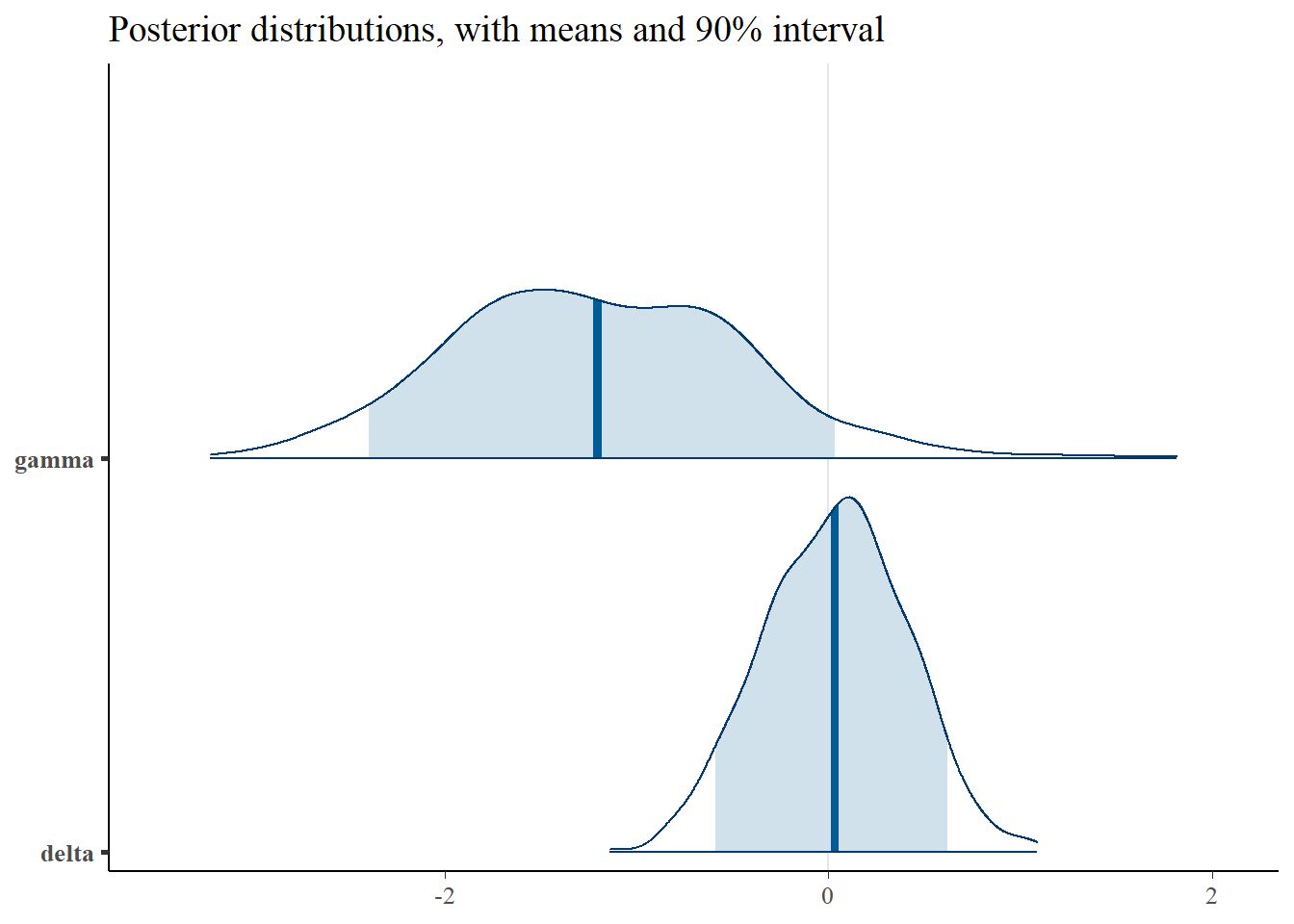

plot_title <- ggtitle(paste("Posterior distributions, with means and 90% interval"))

p_post <- mcmc_areas(posterior, prob = 0.9, point_est="mean", regex_pars = c("gamma", "delta")) + plot_title

print(p_post)

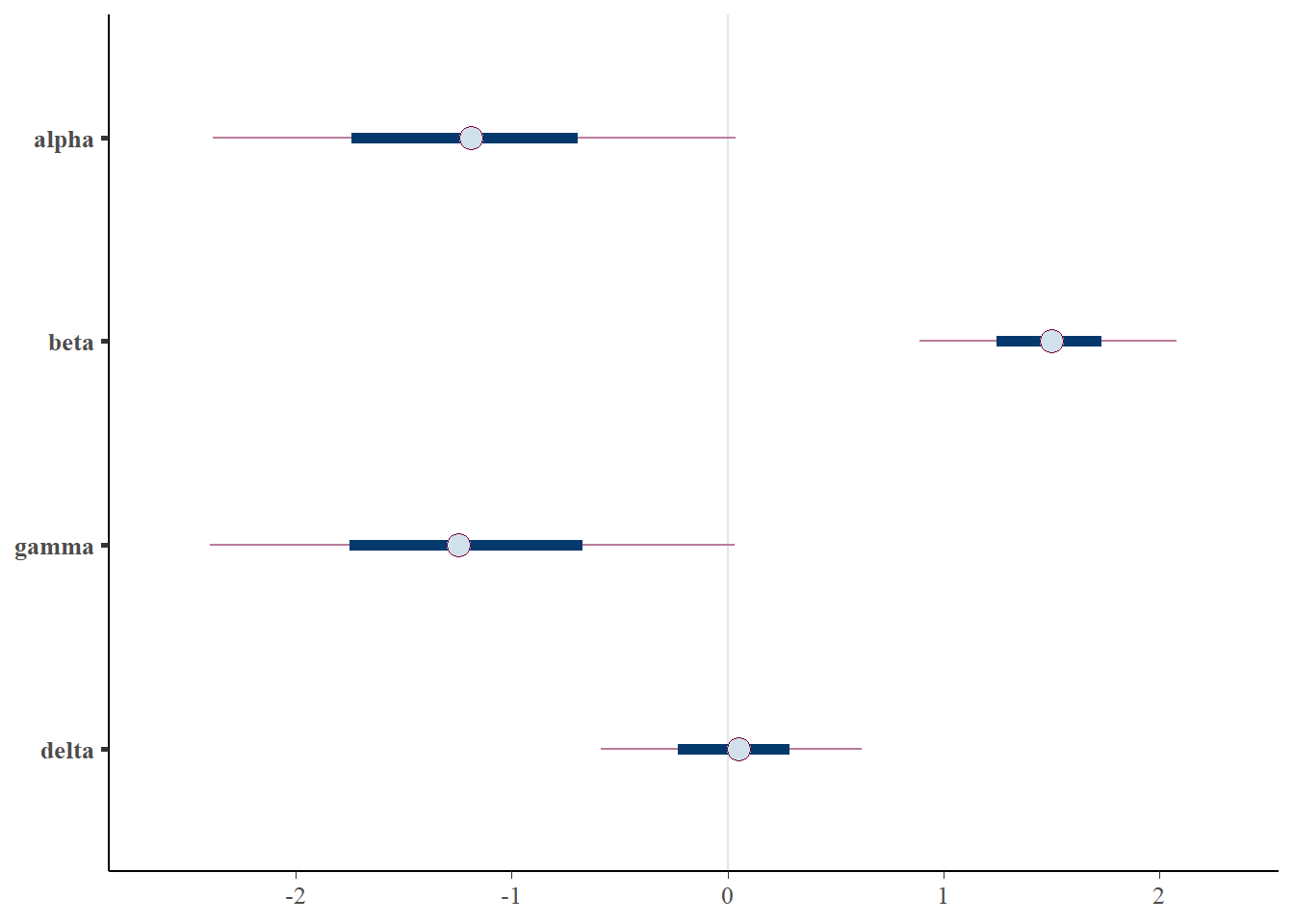

mcmc_intervals(posterior)

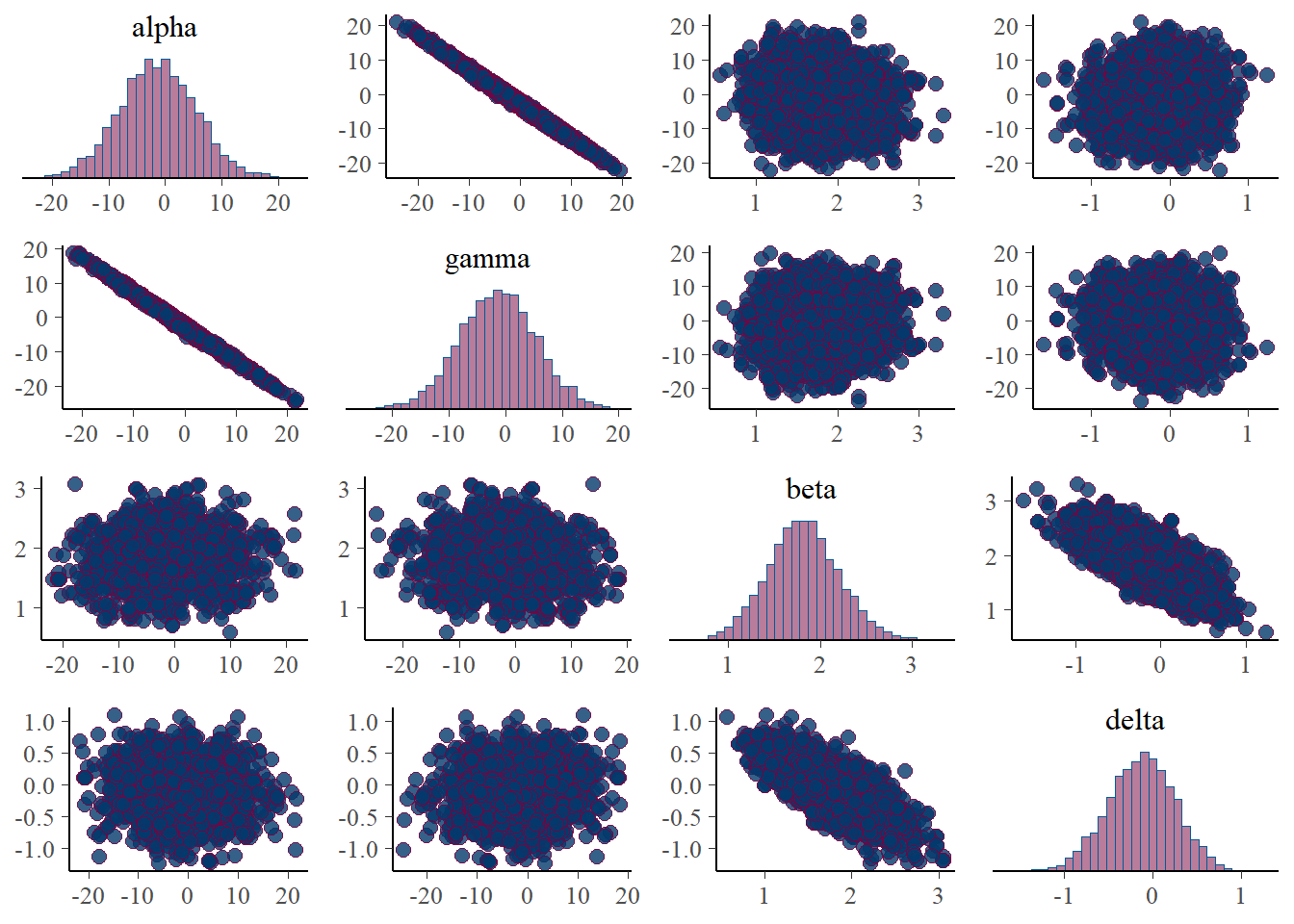

Importantly, note the wide credible intervals on \(\alpha\) and \(\gamma\). Why? See the scatter plots below.

plot_title <- ggtitle("Parameter posterior sample correlation")

p_post <- mcmc_pairs(posterior)

print(p_post)

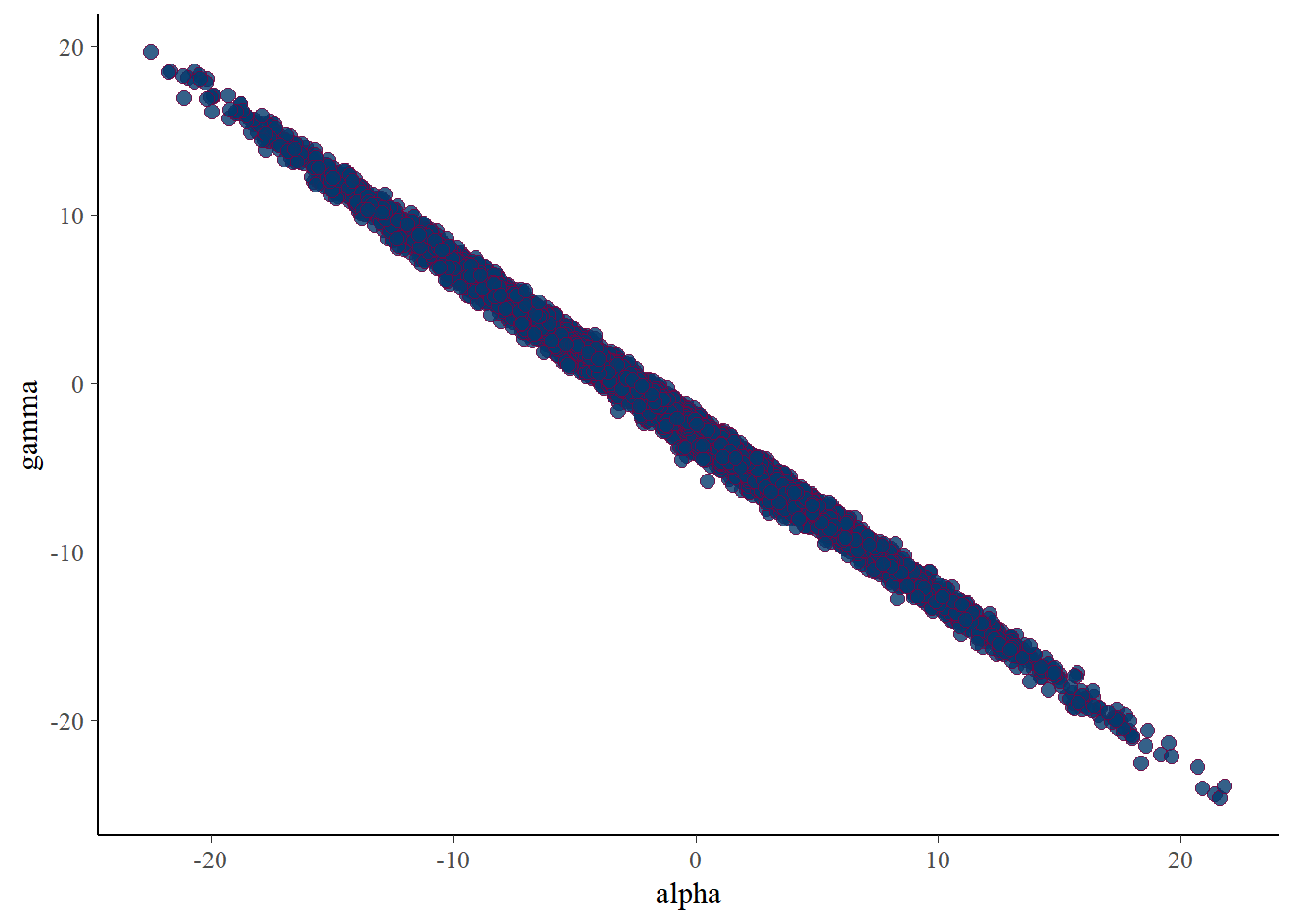

mcmc_scatter(posterior, pars=c('alpha','gamma'))

There is near perfect correlation between \(\alpha\) and \(\gamma\), as expected.

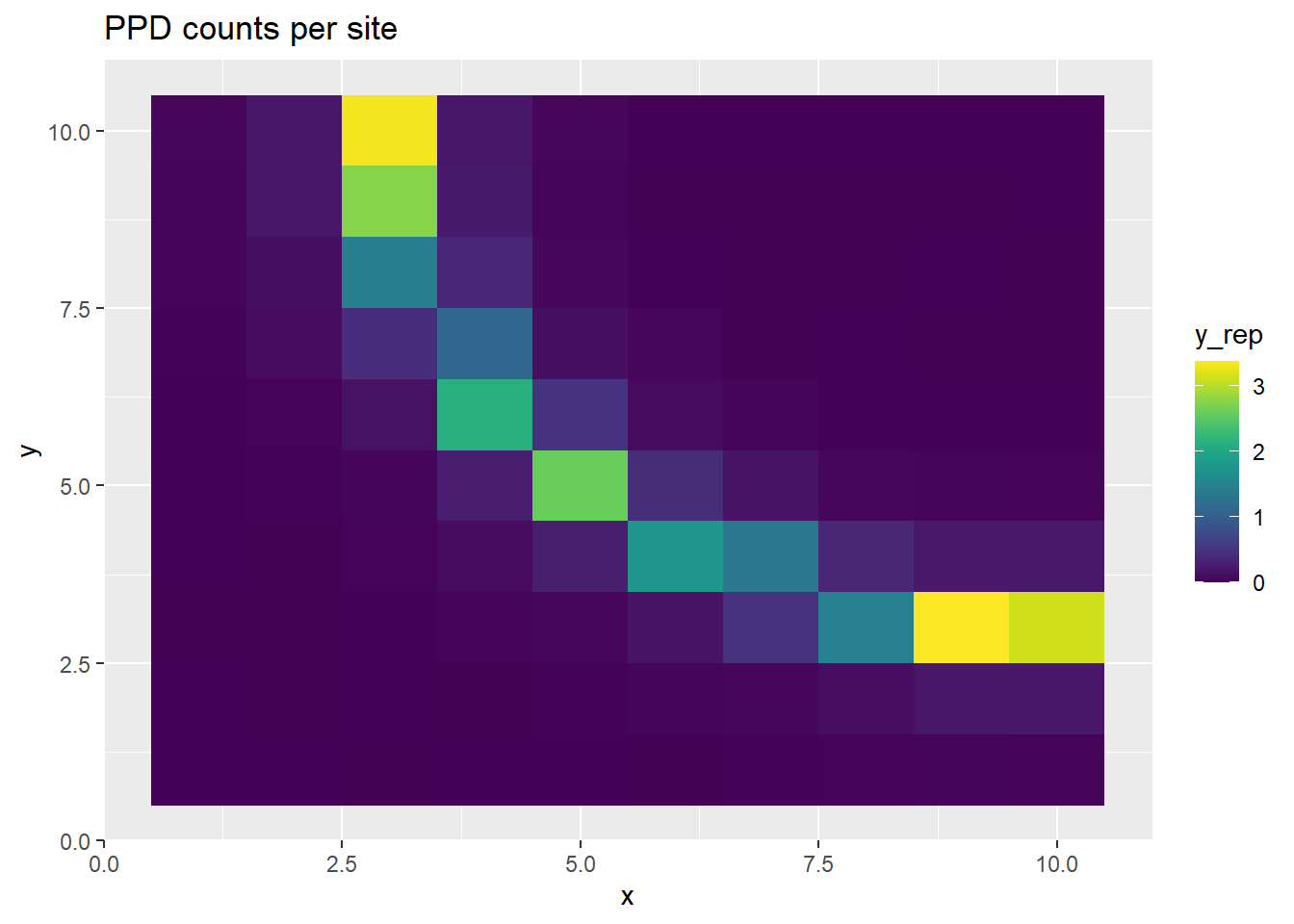

5.3 PPD

We next look at the Posterior Predictive Distribution (PPD) of the observations per site. That is, we plot the generated counts \(Y_i\) for site \(s_i\) on the sampling surface (grid).

The PPD can be expressed as the samples from the predictive distribution of \(Y\) taking into account the uncertainty surrounding the parameters. The PPD can be written as \[ p(\tilde{y}|Y) = \int p(\tilde{y}|\lambda, b, Y)p(\lambda, b|Y) dbd\lambda \]

such that the posterior uncertainty of the parameters is integrated out.

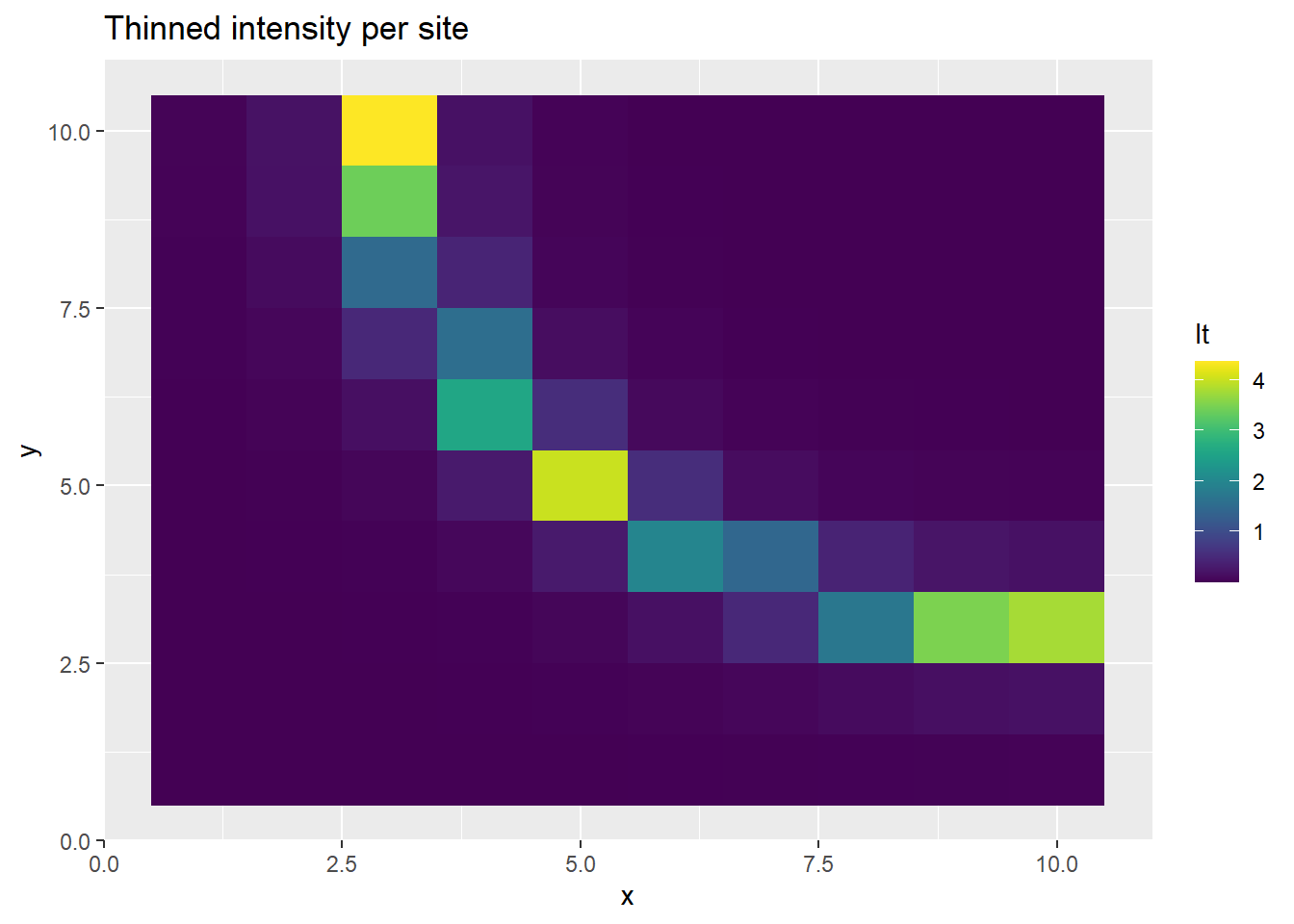

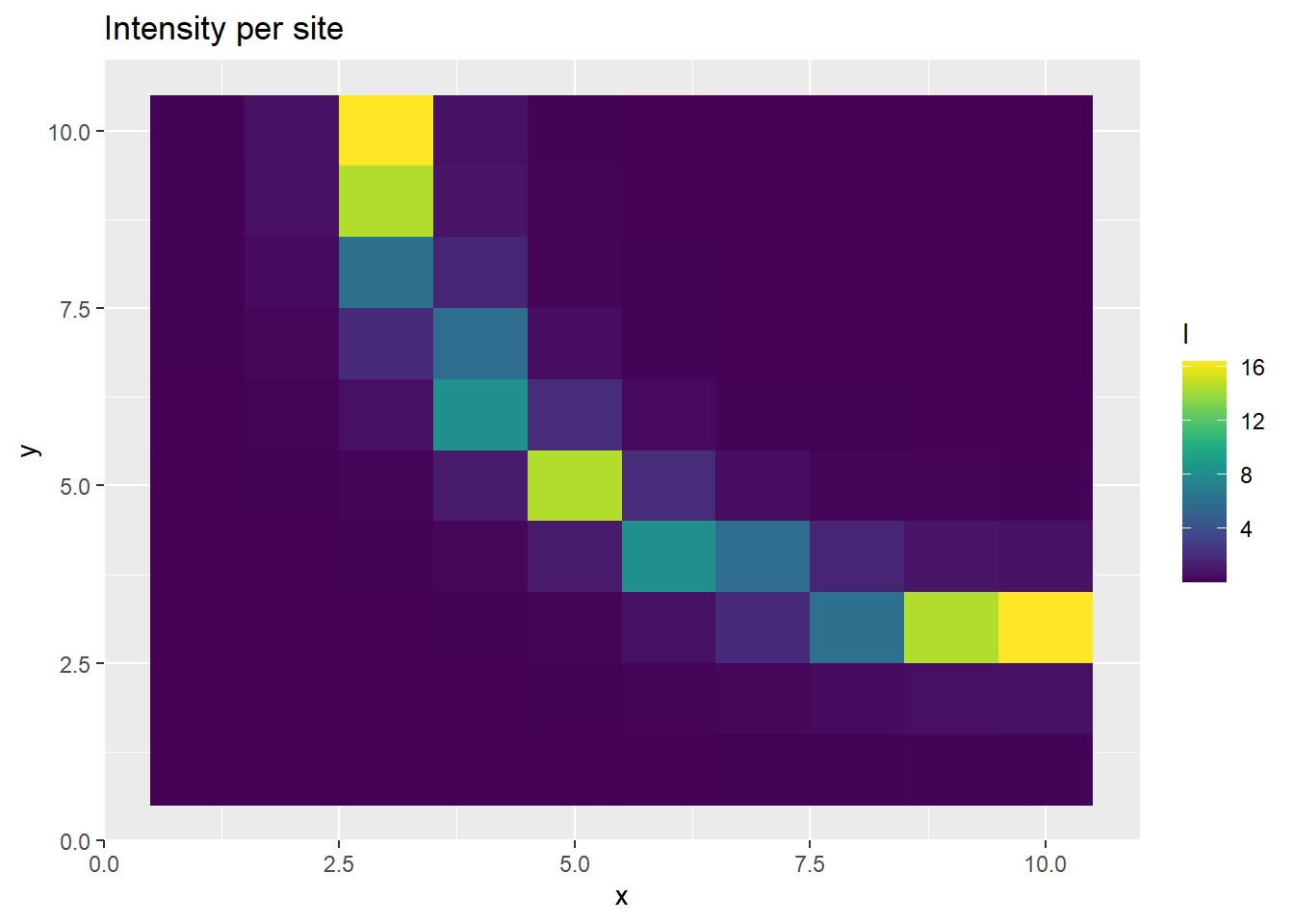

Below we plot the generated values of \(\tilde{y}\) for each site. Additionally, the generated quantities of intensity, bias, and intensity*bias ($b) per site are also plotted on the grid.

generated_vars <- c('lambda_bias', 'lambda_rep', 'b_rep', 'y_rep')

lambda_thinned <- fit$summary(variables=generated_vars[1])$mean

lambda_rep <- fit$summary(variables=generated_vars[2])$mean

bias_rep <- fit$summary(variables=generated_vars[3])$mean

y_ppd <- fit$summary(variables=generated_vars[4])$mean

ppd_df <- data.frame(x=grid_points$x, y=grid_points$y, y_rep=y_ppd, lt=lambda_thinned, l=lambda_rep, b=bias_rep)

head(ppd_df) x y y_rep lt l b

1 1 1 0.002000000 0.001857518 29666.24 243090.3

2 2 1 0.001166667 0.001859585 29731.43 243665.1

3 3 1 0.001666667 0.002067503 30430.25 263499.0

4 4 1 0.001333333 0.002184711 34790.56 244657.8

5 5 1 0.003500000 0.003014073 50946.62 245093.8

6 6 1 0.005166667 0.005084997 90423.75 243710.1It is important to notice that while the thinned intensity is ostensibly realistic in estimation, the intensity and bias are not. This is due to the specification of the Thinned Poisson Process \[\lambda^* = \lambda(s)b(s)\] such that \[\lambda^* = \exp\left[\alpha + \beta*X(s) + \gamma + \delta*Z(s)\right]\]

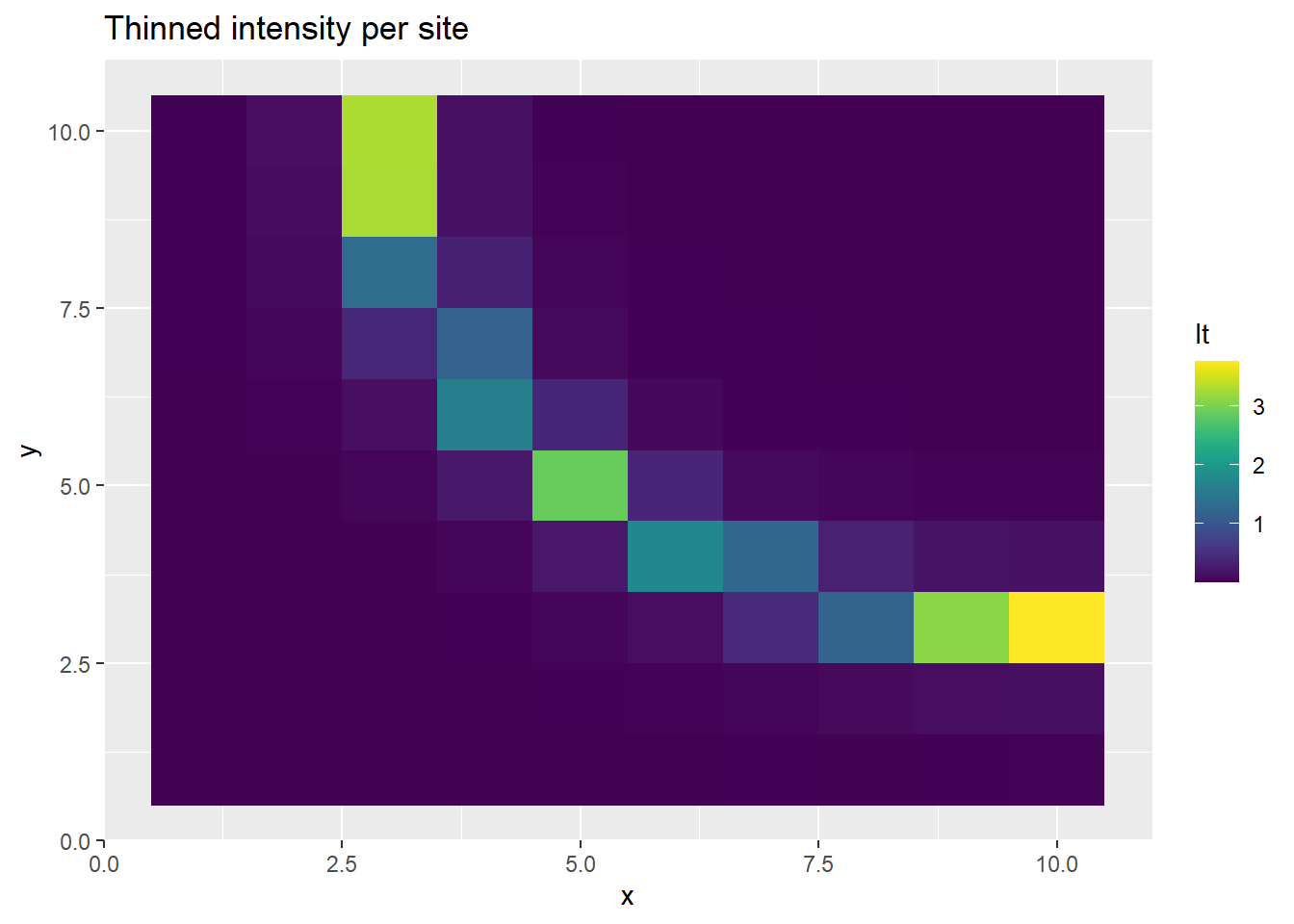

We can then see the generated data in the sampling surface:

p <- ggplot(ppd_df, aes(x, y, fill=y_rep)) +

geom_tile() +

scale_fill_viridis(discrete=FALSE) +

ggtitle("PPD counts per site")

print(p)

p <- ggplot(ppd_df, aes(x, y, fill=lt)) +

geom_tile() +

scale_fill_viridis(discrete=FALSE) +

ggtitle("Thinned intensity per site")

print(p)

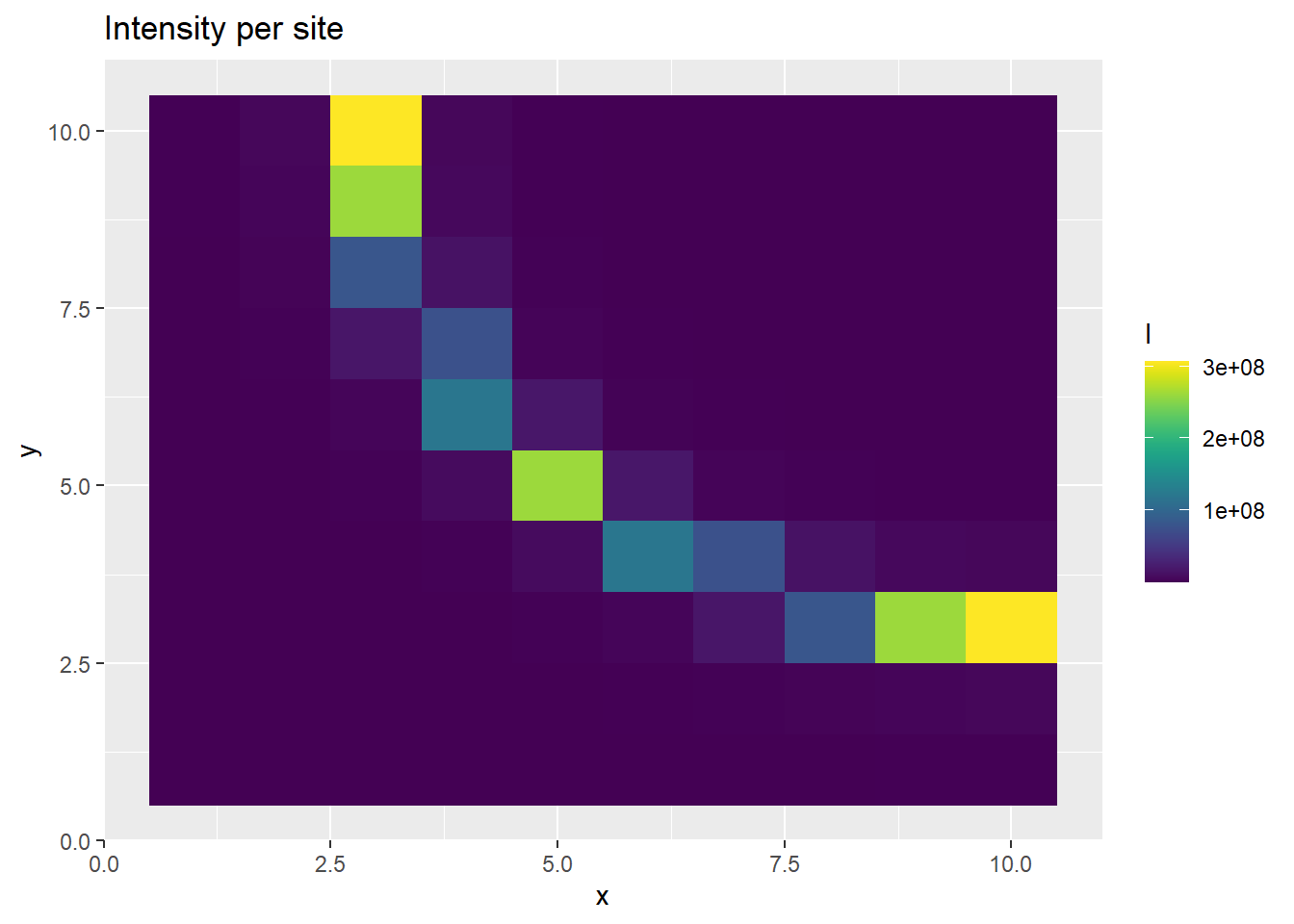

p <- ggplot(ppd_df, aes(x, y, fill=l)) +

geom_tile() +

scale_fill_viridis(discrete=FALSE) +

ggtitle("Intensity per site")

print(p)

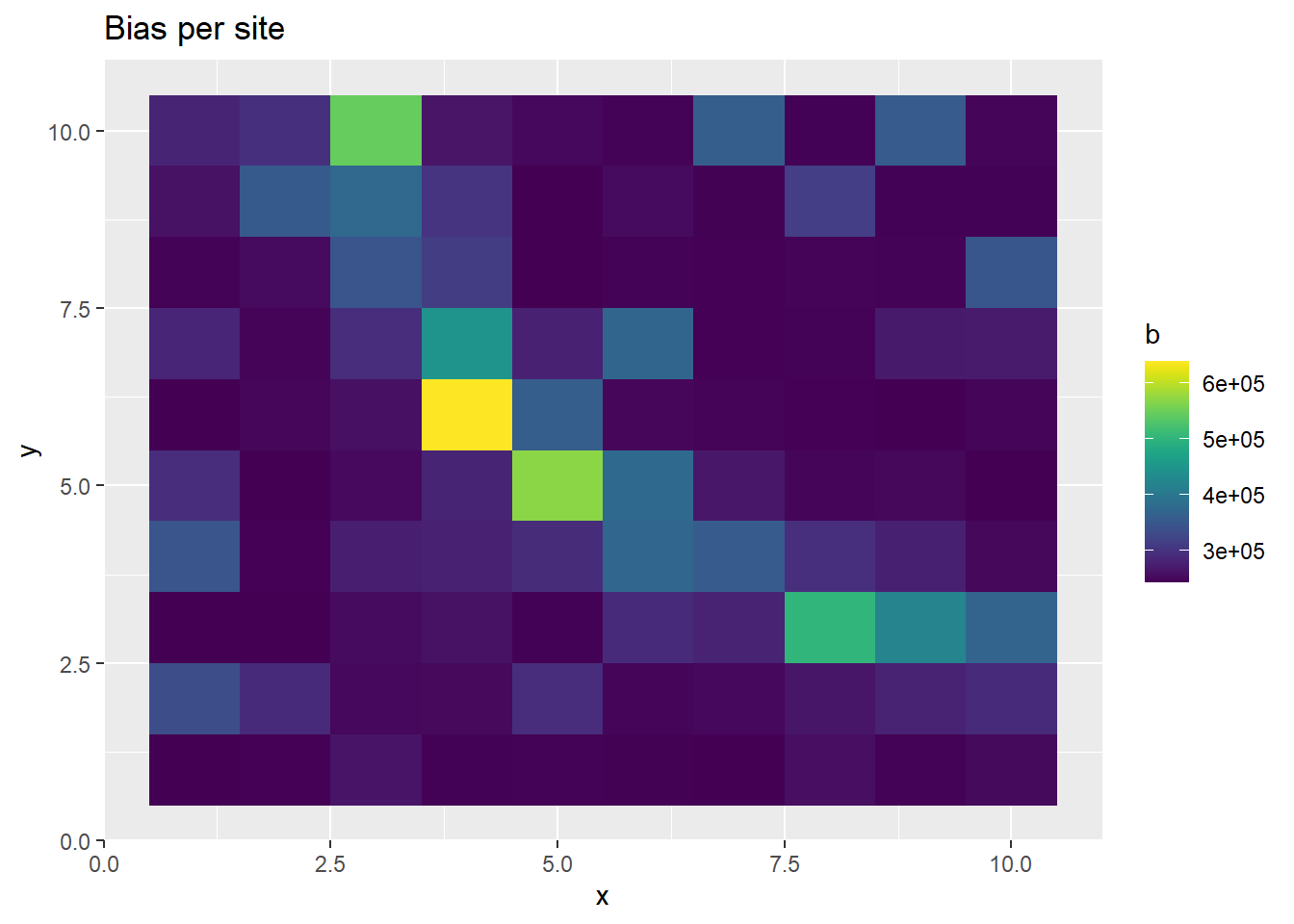

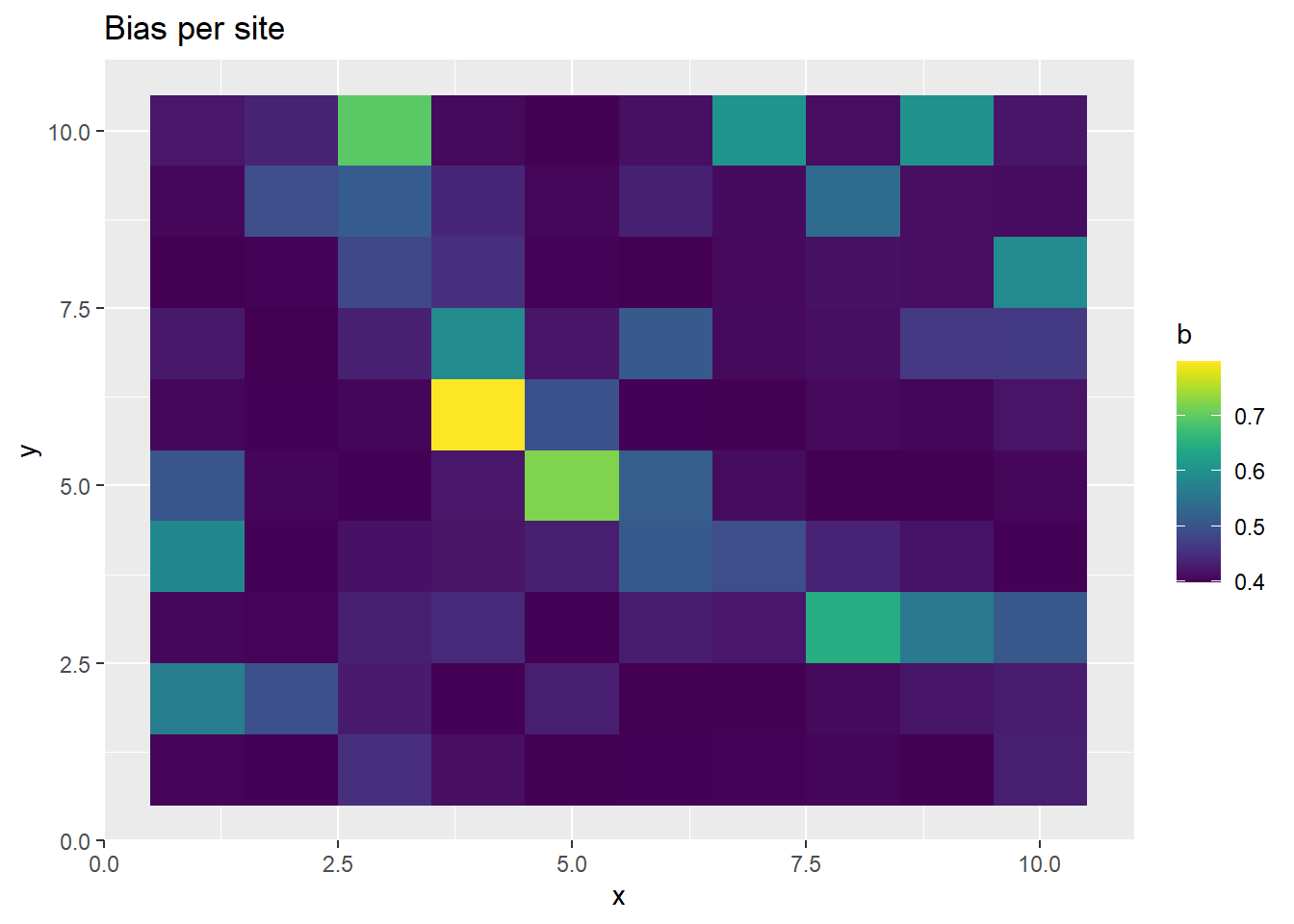

p <- ggplot(ppd_df, aes(x, y, fill=b)) +

geom_tile() +

scale_fill_viridis(discrete=FALSE) +

ggtitle("Bias per site")

print(p)

The prediction of PO observatiosn at each site is realistic, as is the thinned intensity. But alone, the intensity and bias predictive disitributions are poorly estimated.

5.4 Correlation between coefficients

In the above plots, it seems that the bias and unthinned intensity are behaving strangely, even though the thinned intensity is generated as we would expect. This is a manifestation of the completely correlated parameters describing the behavior of the intensity and the bias. We can notice that the generated bias and intensity alone are both really large. The issue with the large values appears this way because we are taking the mean of the posterior distribution as our point estimate and showing that in the plot of the sites above. However, when we generate the thinned intensity, we multiply each draw from the posterior predictive chain together. So even though the mean of the posterior chain is high, the individual draws of the bias and intensity inversely fluctuate around each other, due the high correlation between the two parameters.

We take a look at the posterior intervals of the estimates to get a little better idea of this.

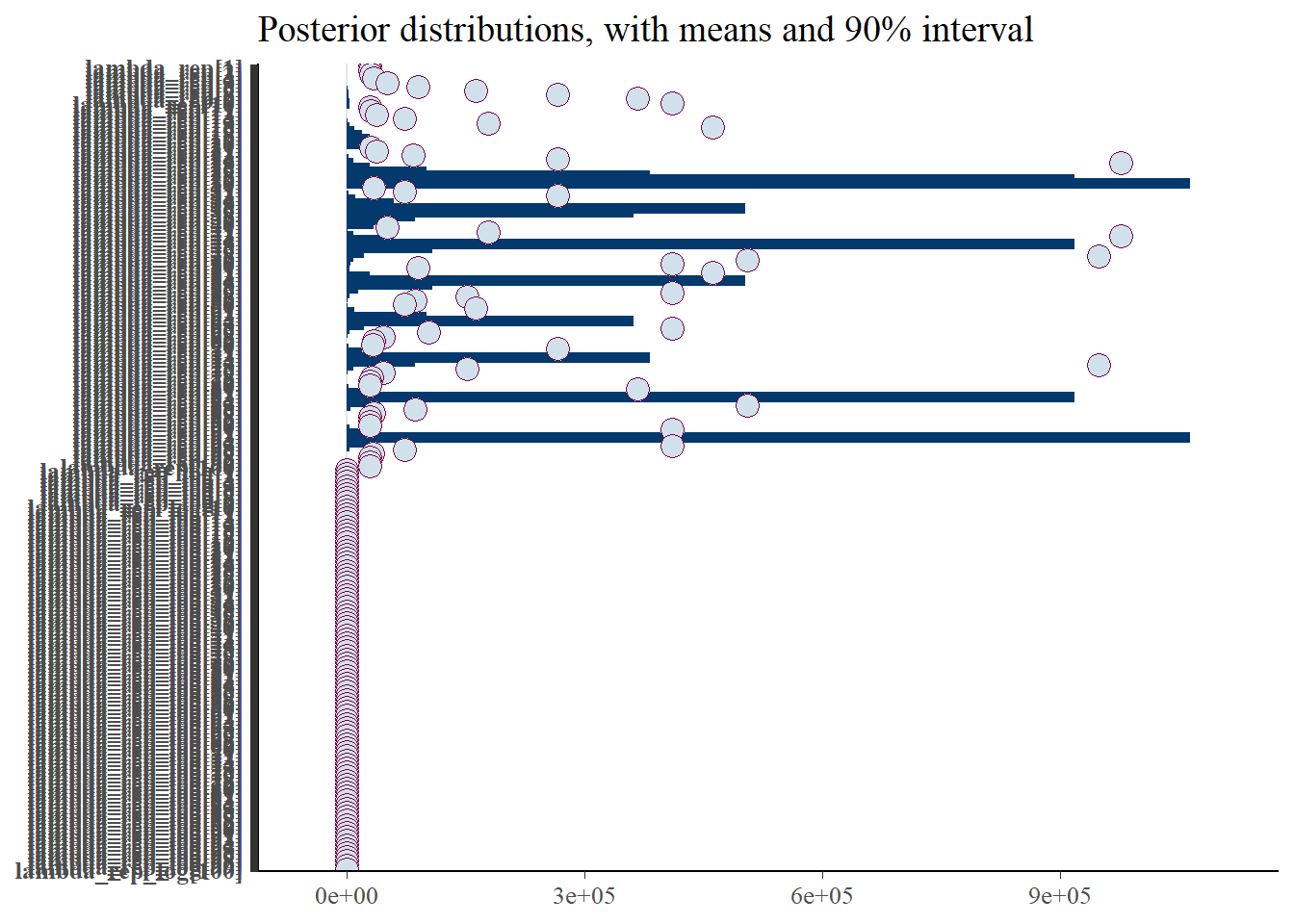

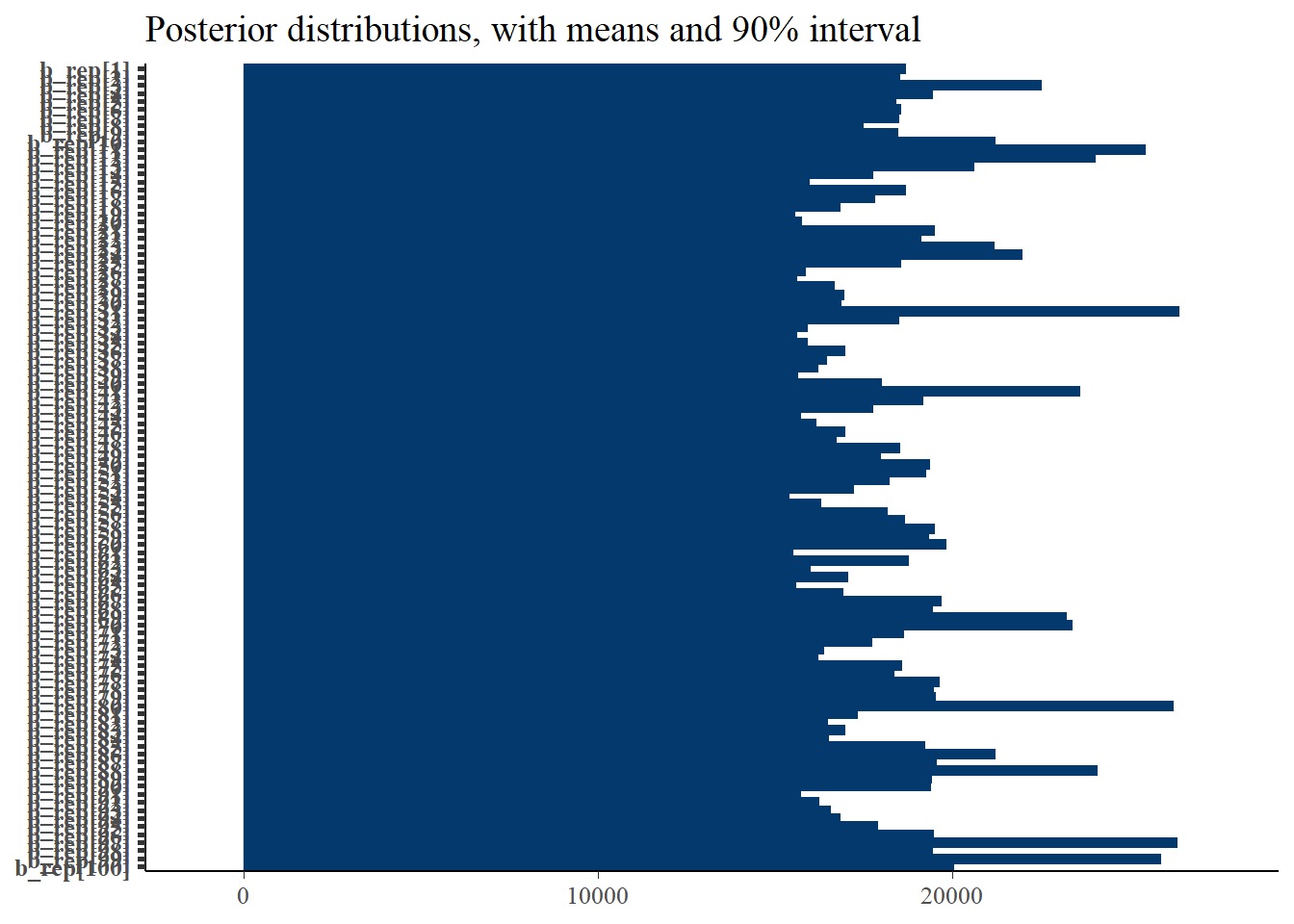

plot_title <- ggtitle(paste("Posterior distributions, with means and 90% interval"))

p_post <- mcmc_intervals(fit$draws(), prob = 0.9, point_est="mean", regex_pars = generated_vars[1]) + plot_title

print(p_post)

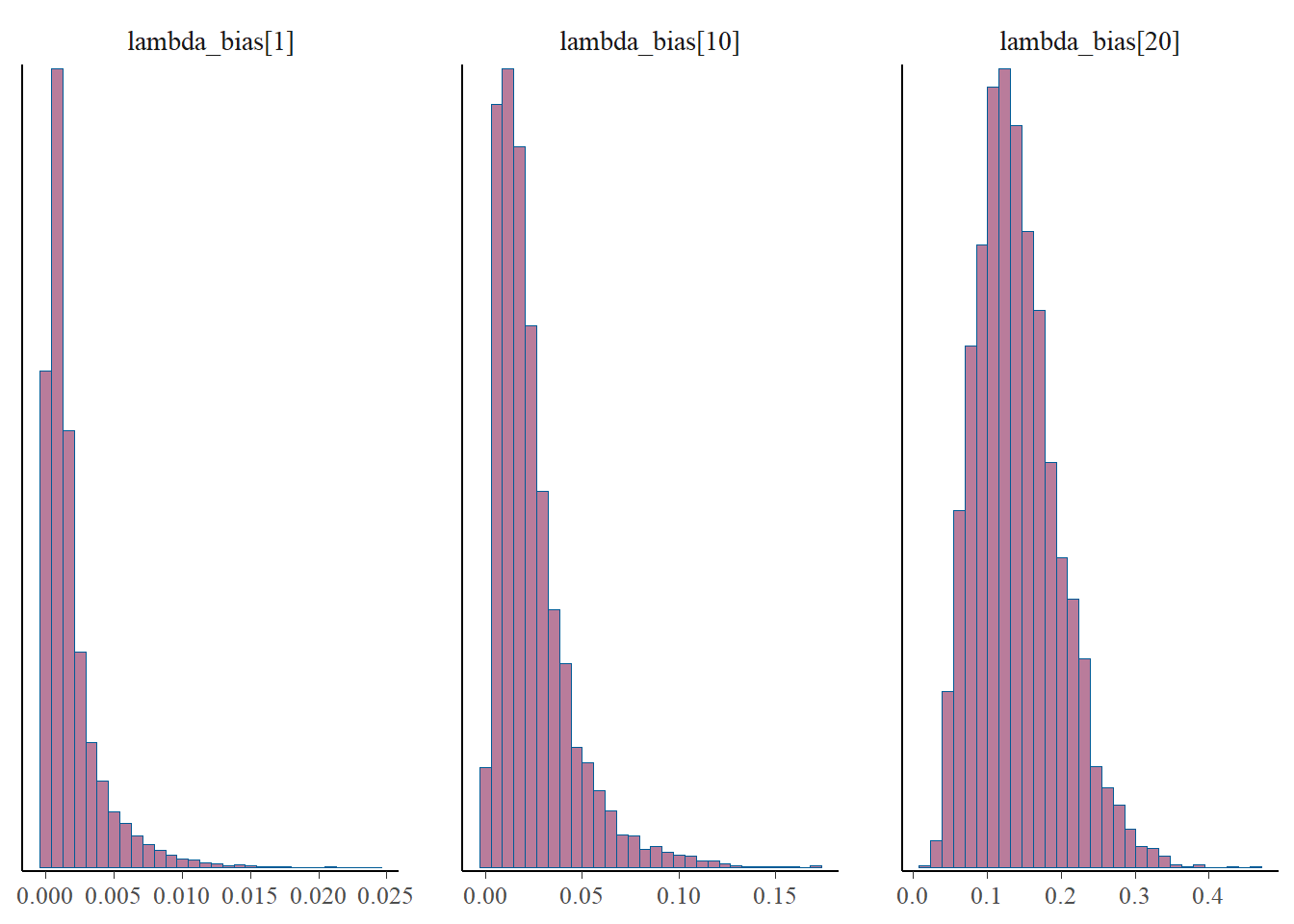

The intervals shown above are for the thinned bias. These are relatively reasonable, as stated.

p_post <- mcmc_intervals(fit$draws(), prob = 0.9, point_est="mean", regex_pars = generated_vars[2]) + plot_title

print(p_post)Warning: Removed 37 rows containing missing values (`geom_point()`).

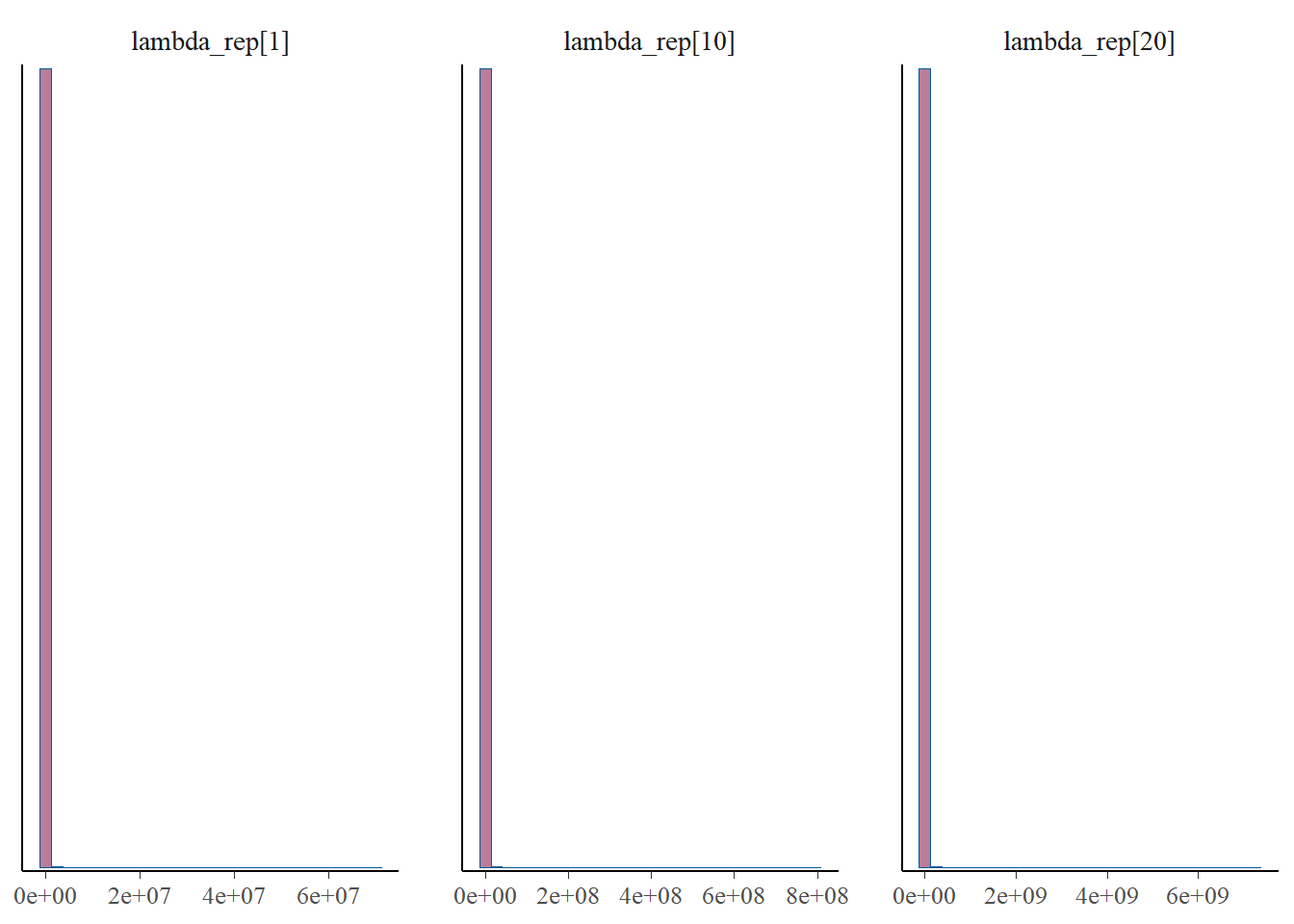

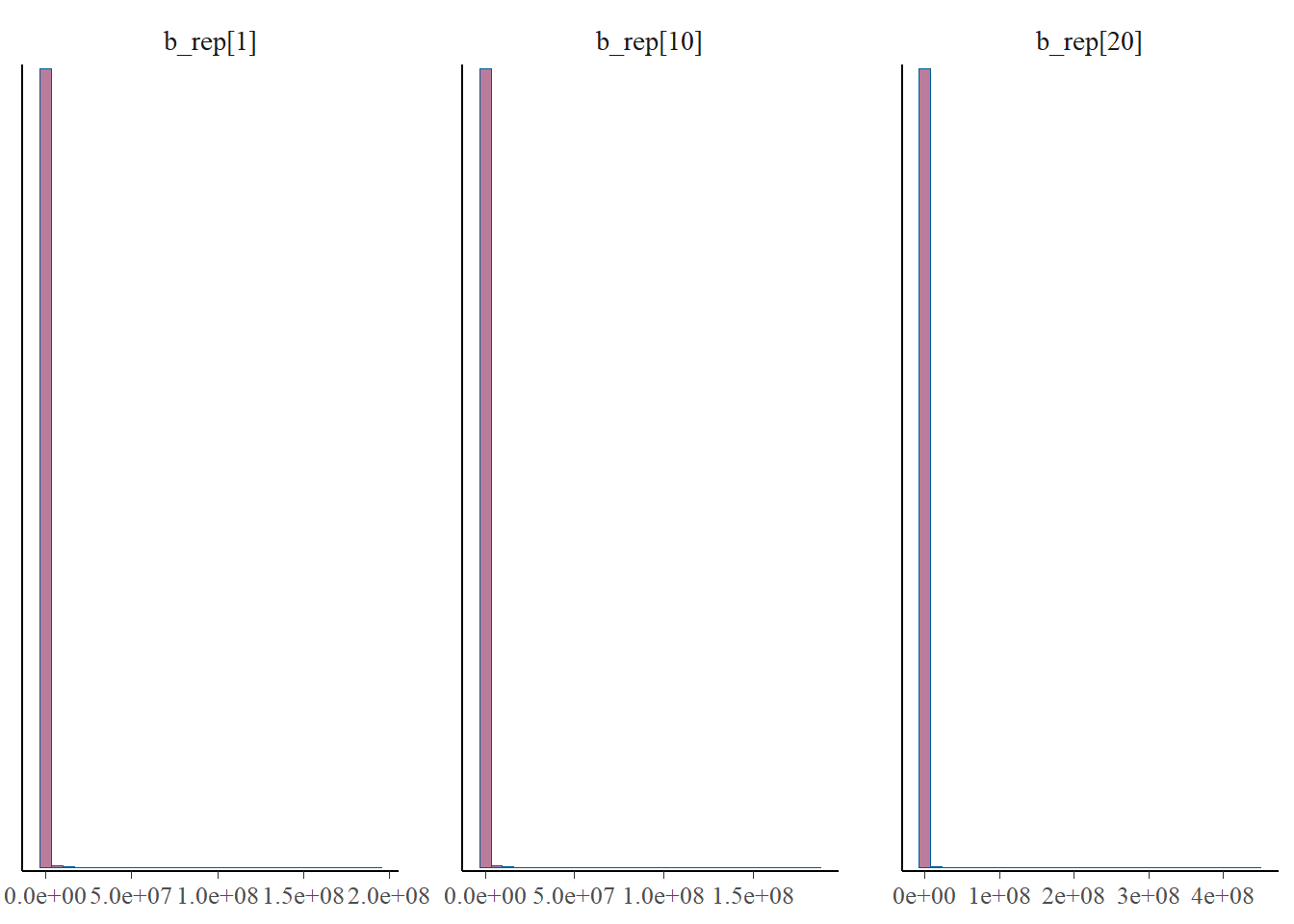

However, the intervals for the intensity (unthinned) are unrealistic, due to the unindentifiable parameters.

p_post <- mcmc_intervals(fit$draws(), prob = 0.9, point_est="mean", regex_pars = generated_vars[3]) + plot_title

print(p_post)Warning: Removed 100 rows containing missing values (`geom_point()`).

And finally, the intervals of the generated bias is also not correct.

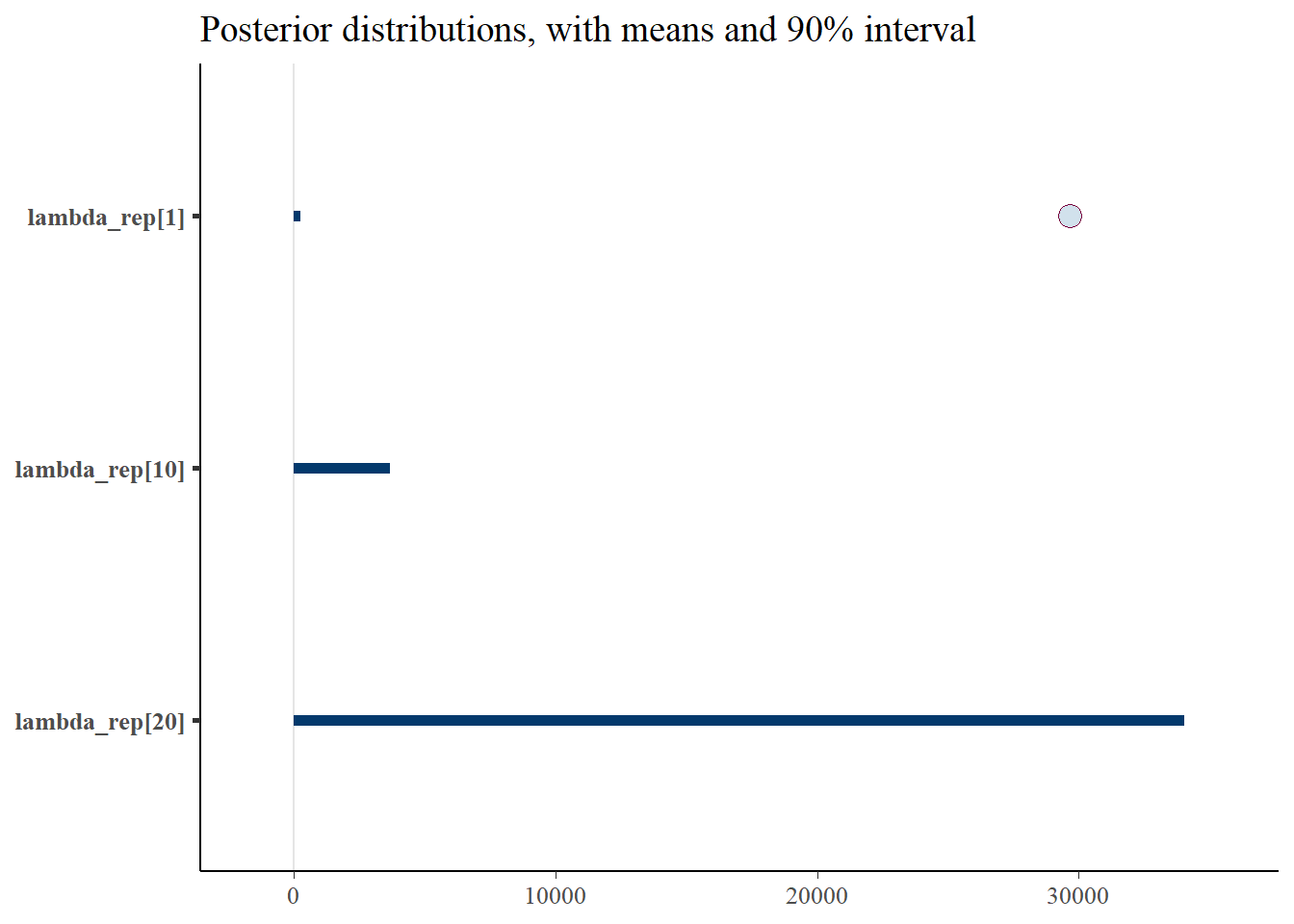

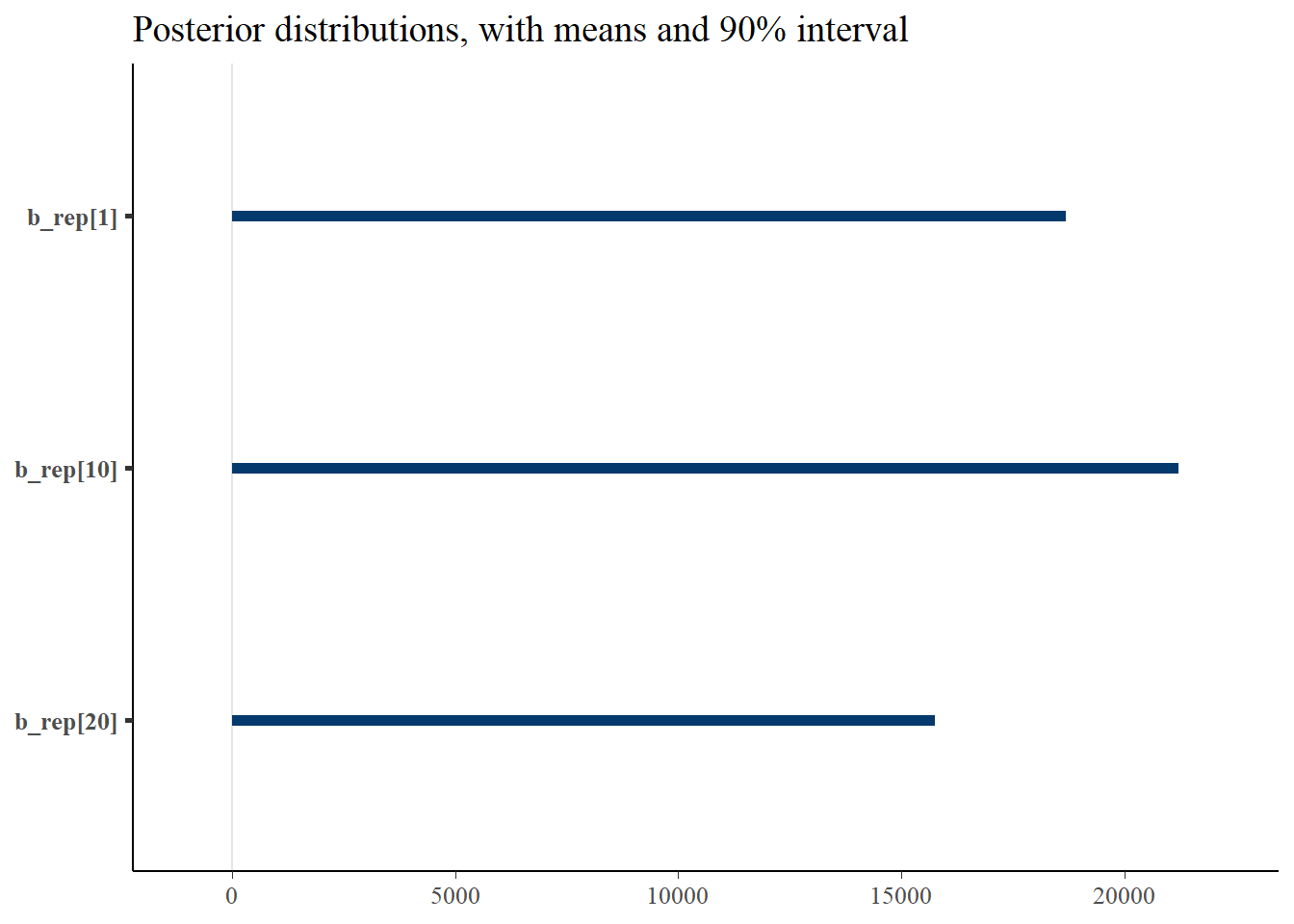

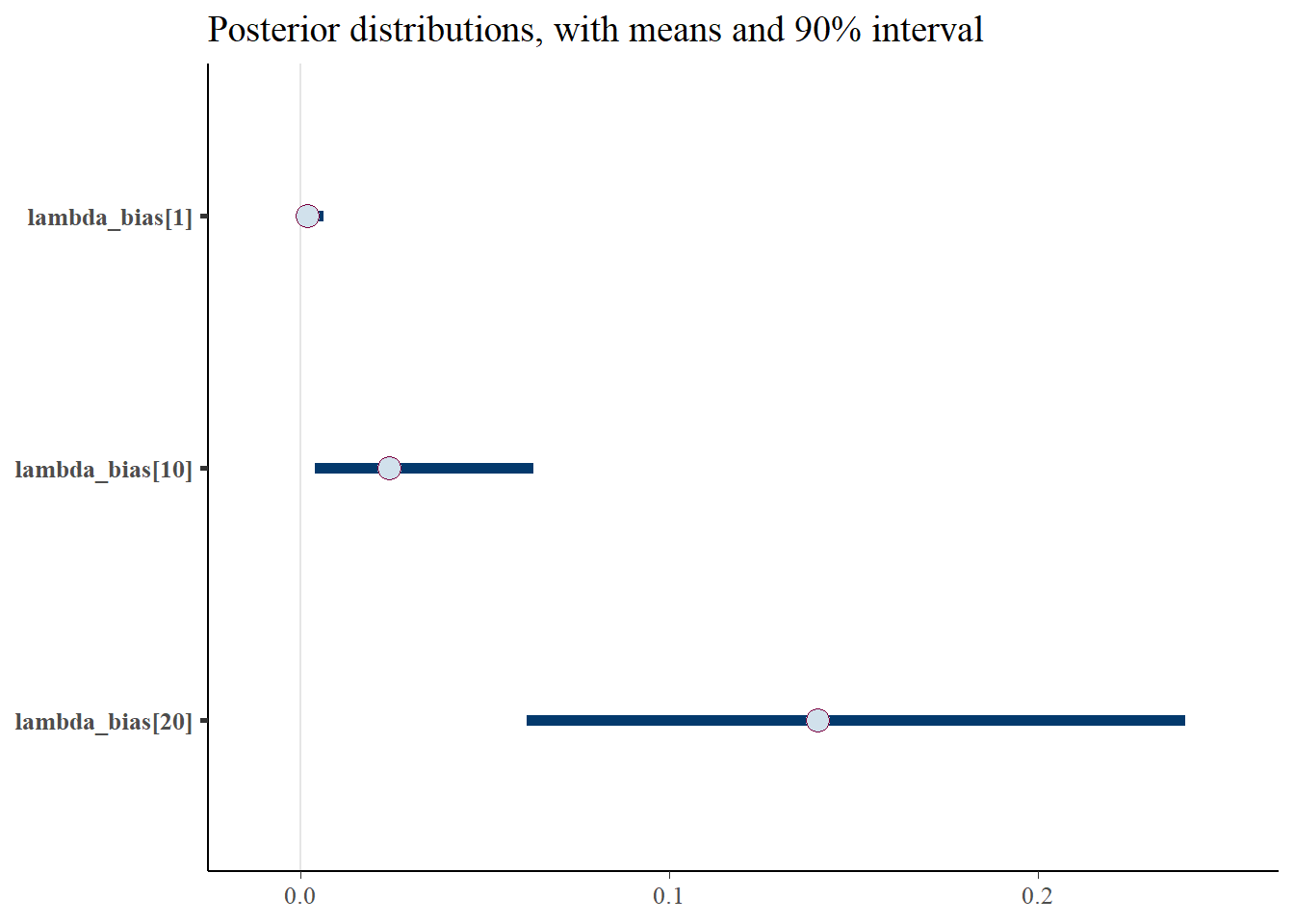

Then we look at the intervals and histograms of the posterior chains for just a few chains.

# The gen parameters for just a few of the sites

gen_lambda <- c('lambda_rep[1]', 'lambda_rep[10]', 'lambda_rep[20]')

gen_lb <- c('lambda_bias[1]', 'lambda_bias[10]', 'lambda_bias[20]')

gen_b <- c('b_rep[1]', 'b_rep[10]', 'b_rep[20]')

p_post <- mcmc_intervals(fit$draws(), prob = 0.9, point_est="mean", pars = gen_lambda) + plot_title

print(p_post)Warning: Removed 2 rows containing missing values (`geom_point()`).

p_post <- mcmc_intervals(fit$draws(), prob = 0.9, point_est="mean", pars = gen_b) + plot_title

print(p_post)Warning: Removed 3 rows containing missing values (`geom_point()`).

p_post <- mcmc_intervals(fit$draws(), prob = 0.9, point_est="mean", pars = gen_lb) + plot_title

print(p_post)

p_post <- mcmc_hist(fit$draws(), pars = gen_lambda)

print(p_post)`stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

p_post <- mcmc_hist(fit$draws(), pars = gen_b)

print(p_post)`stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

p_post <- mcmc_hist(fit$draws(), pars = gen_lb)

print(p_post)`stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

# Look at counts for each site

#gen_y <- c("y_rep[1]", "y_rep[10]", "y_rep[20]")

#p_post <- mcmc_hist(fit$draws(), pars=gen_y)

#print(p_post)From this we notice that the intervals for some of the generated lambdas and biases are wide, but not for the thinned values. But even for those that have wide intervals, nearly all of the chain values in the histograms are the same. This is because it’s only a few values that cause the mean to be biased, due to the correlation of the parameters.

The take away is that the mean for the generated quantities is very skewed by the occassional large value. This high skew is caused by the correlation between b and l, since these parameters are not estimable between themselves. Therefore, we arrive at reasonable estimates for b*l since this is draw specific (where when one is high the other is low), but the overall mean for either b or l over multiple draws cannot be trusted. This is clear from the histograms of \(\lambda\) and the bias, where most of the values take on very low values, but do fluctuate higher. And when one goes higher, the other goes lower, which I’d like to check a specific example of, for one draw.

5.5 Diagnostics

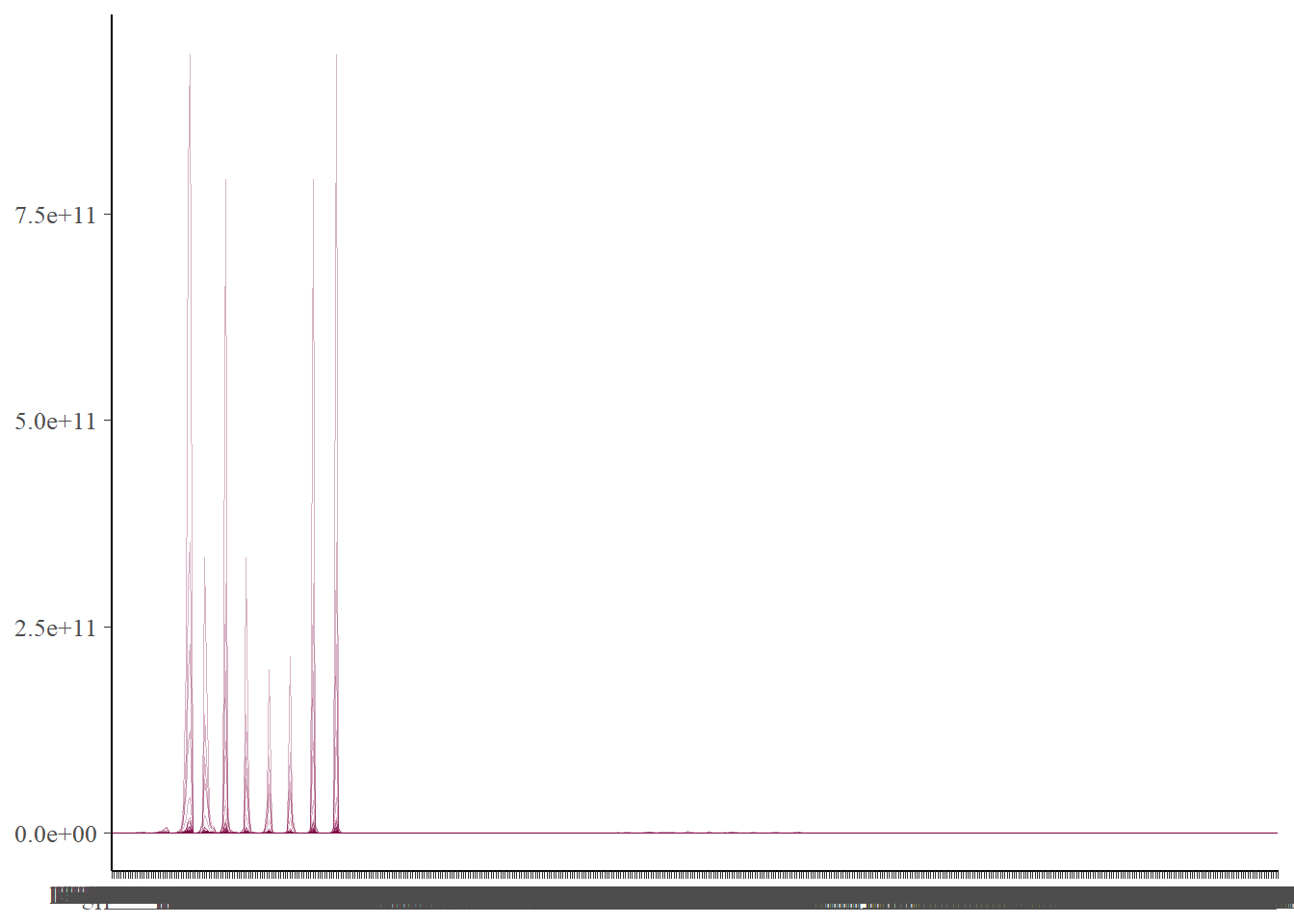

Finally, it is possible to look at a variety of NUTS diagnostics metrics. I am not going to go into much detail here.

nuts_fit <- nuts_params(fit)

mcmc_parcoord(fit$ draws(), np=nuts_fit)

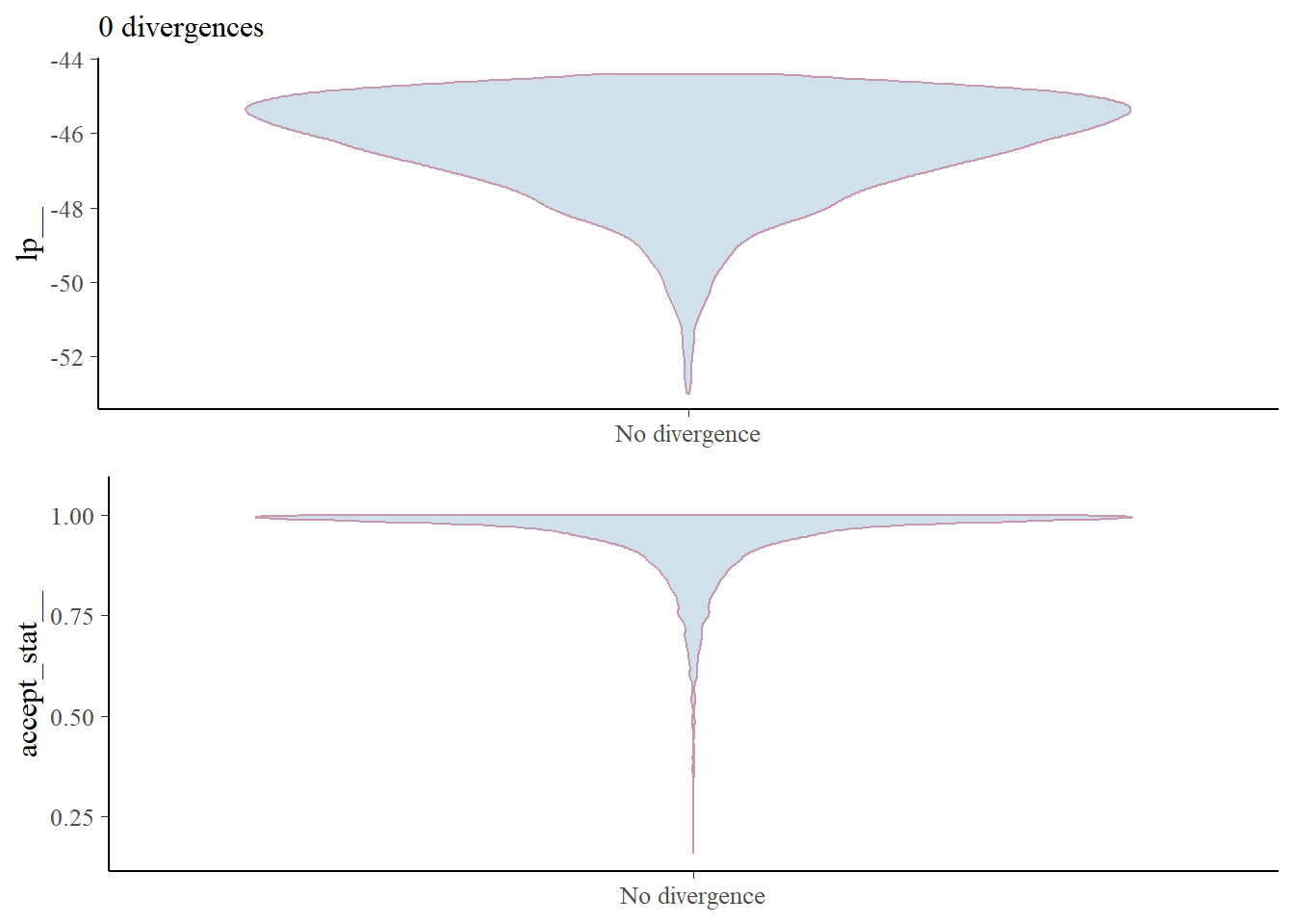

mcmc_nuts_divergence(nuts_fit, log_posterior(fit))

The parcord plot shows 1 line per iteration of the sampler. We can see the values each sampled parameter takes on, which allows us to notice the extent that correlation has on the sampled values. If there are any divergences in the sampling, these will show up in red and help us to pick out the parameters that may be contributing. to this.

There are no divergences so we don’t need to worry.

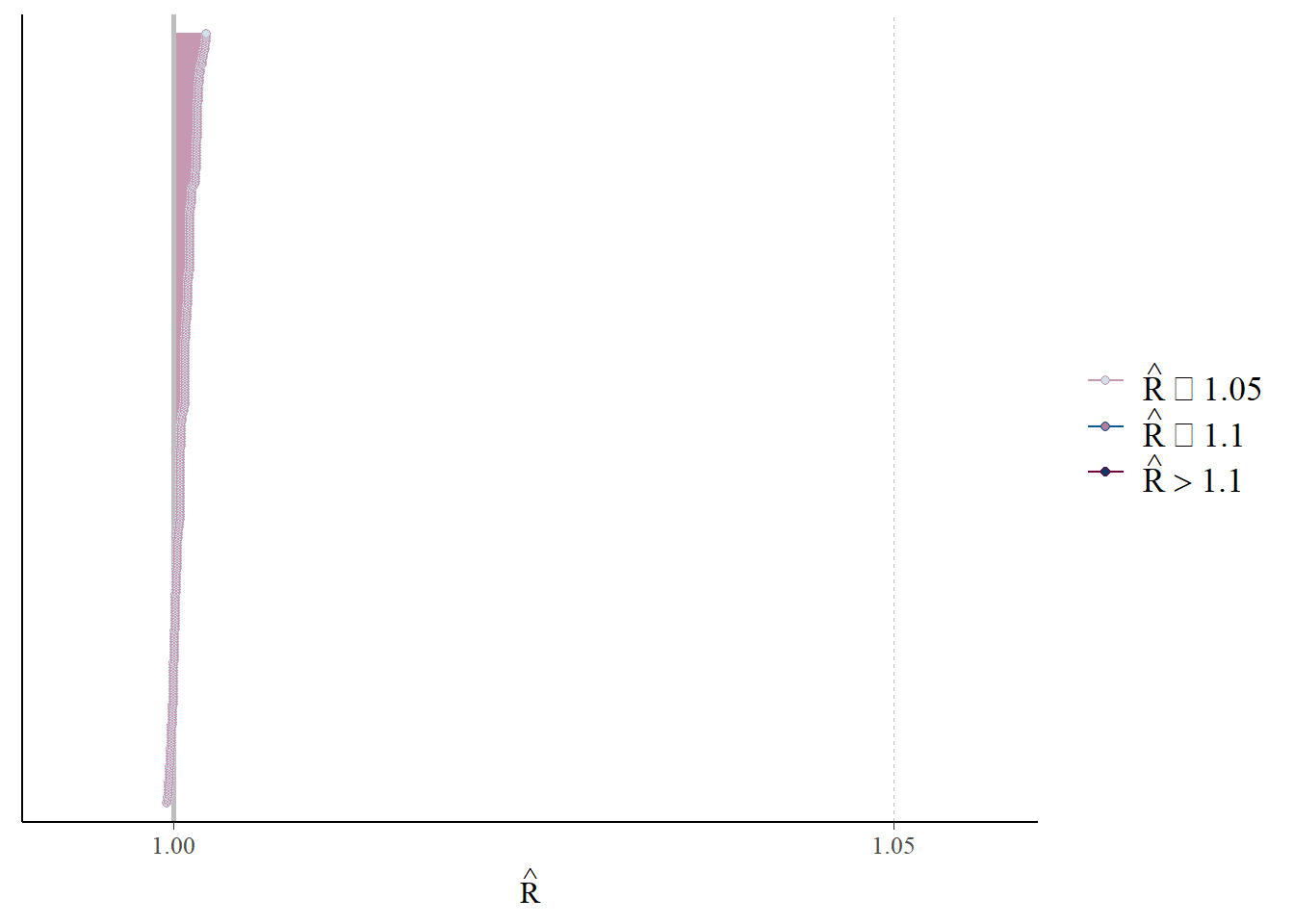

mcmc_rhat(rhat(fit))

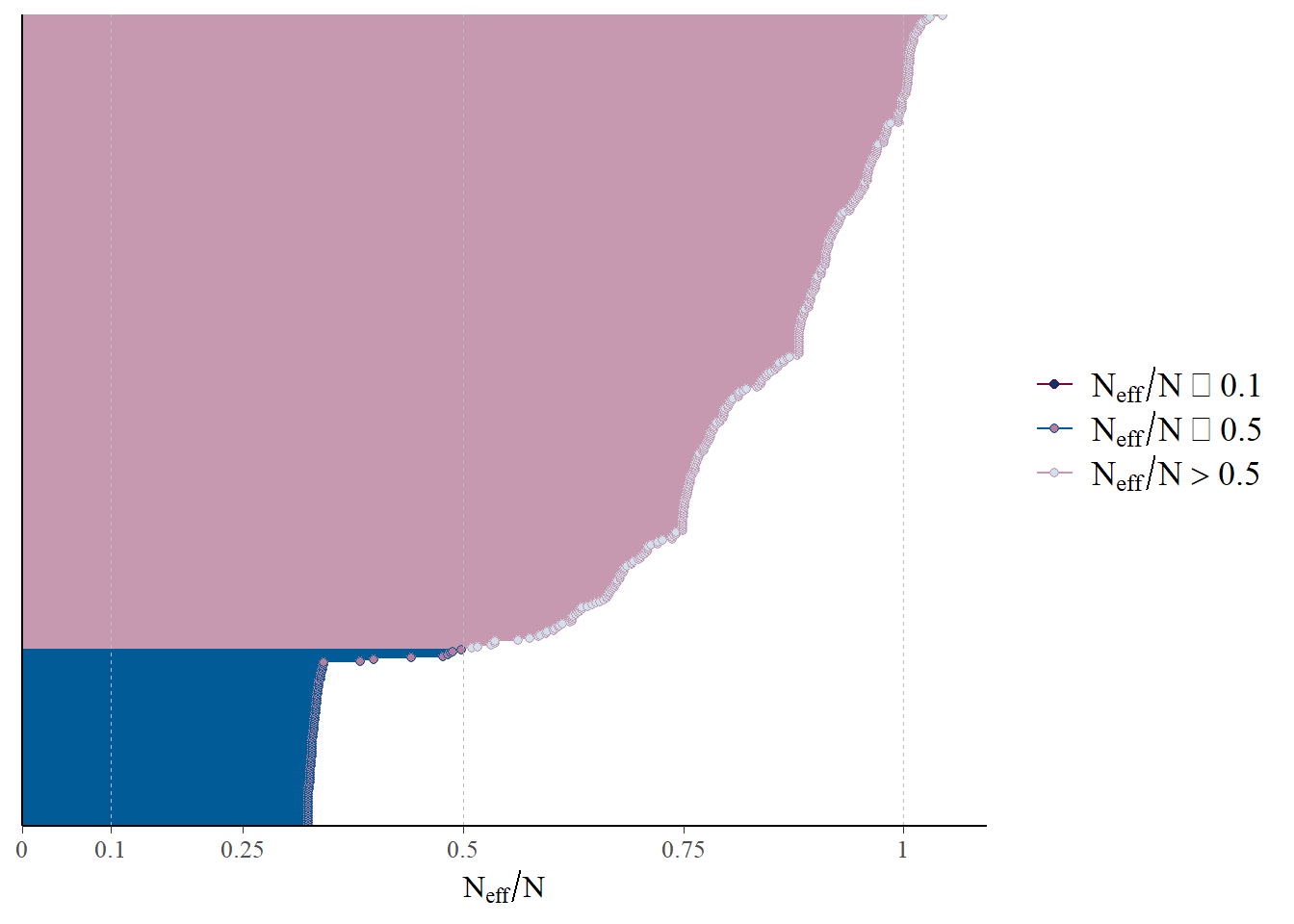

mcmc_neff(neff_ratio(fit))

#mcmc_nuts_energy(nuts_fit, binwidth=1/2)

#mcmc_nuts_acceptance(nuts_fit, log_posterior(fit))R hat, which compares between and within chain estimates, looks good.

“neff” is the effective sample size estimates the number of independent samples, after accounting for autocorrelation in the chains. The figure here shows there are a reasonable number of independent samples, though the threshold is somewhat arbitrary.

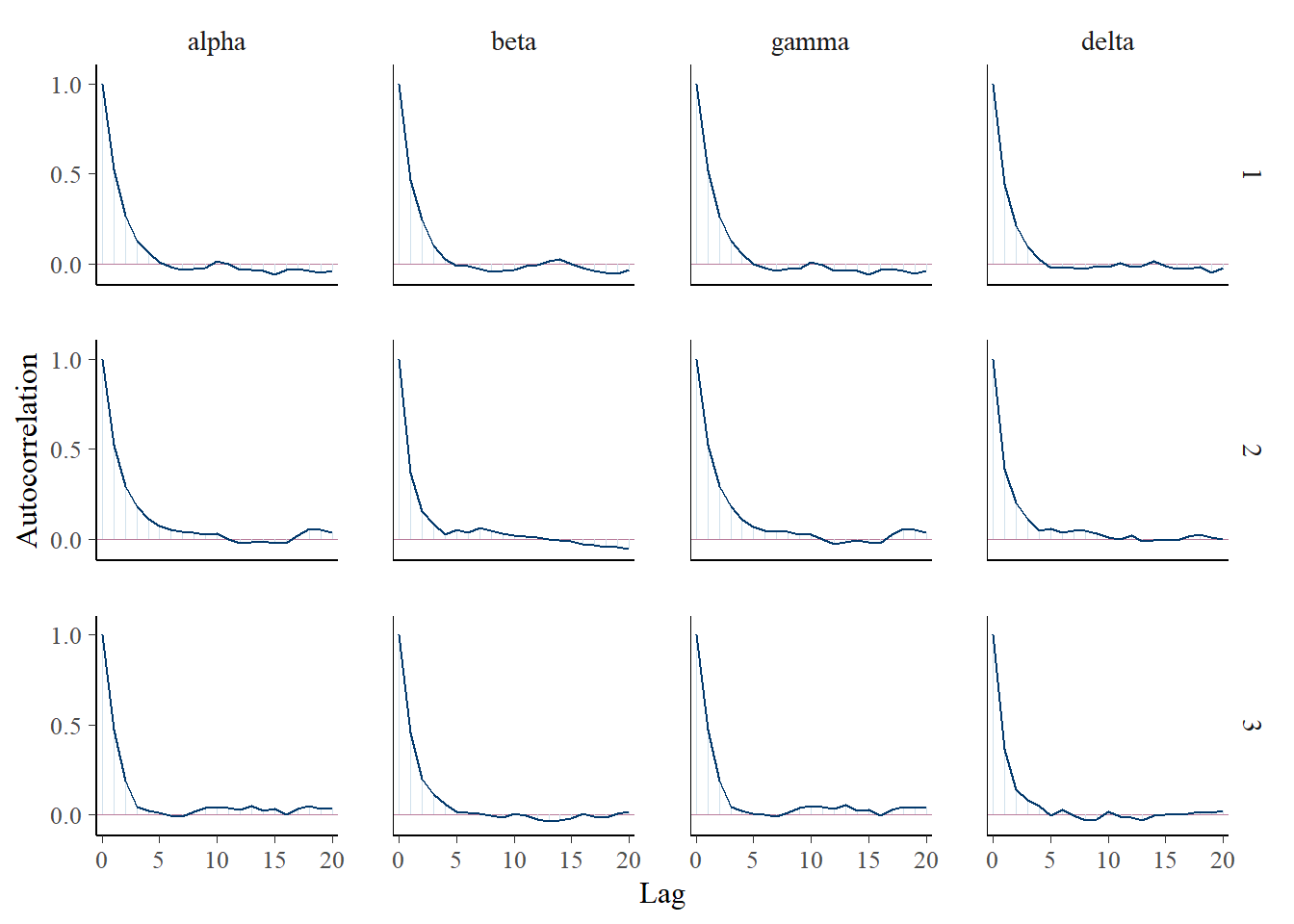

mcmc_acf(fit$draws(), pars=c('alpha', 'beta', 'gamma', 'delta'))

Autocorrelation in the chains looks well-handled.

fit$cmdstan_diagnose()Processing csv files: C:/Users/msavery/AppData/Local/Temp/RtmpG2BpUK/thinned_poisson_process-202405241050-1-52106e.csv, C:/Users/msavery/AppData/Local/Temp/RtmpG2BpUK/thinned_poisson_process-202405241050-2-52106e.csv, C:/Users/msavery/AppData/Local/Temp/RtmpG2BpUK/thinned_poisson_process-202405241050-3-52106e.csv

Checking sampler transitions treedepth.

Treedepth satisfactory for all transitions.

Checking sampler transitions for divergences.

No divergent transitions found.

Checking E-BFMI - sampler transitions HMC potential energy.

E-BFMI satisfactory.

Effective sample size satisfactory.

Split R-hat values satisfactory all parameters.

Processing complete, no problems detected.# Leave loo out.

#loo_results <- fit$loo(variables="lp__", cores=4)

#print(loo_results)It appears we have no additional problems in the models according the the Stan diagnostics.

5.6 LGCP

We next move on to incorporating spatial correlation. To do so, we fit a Log Gaussian Cox Process (LGCP). Where before we specified the intensity as \[ \lambda(s) = \theta(s)b(s) = \exp[\alpha + \beta'x(s) + \gamma + \delta'z(s)] \] we now add a Gaussian process to incorporate spatial correlation between sites \[ \lambda(s) = \theta(s)b(s) = \exp[\alpha + \beta'x(s) + \gamma + \delta'z(s) + w(s)] \] so that the expected value for quadrat \(A\) will be the integral over the quadrat: \[ \Delta(A) = \int_A\lambda(s)ds = \int_A \exp[\alpha + \beta'x(s) + \gamma + \delta'z(s) + w(s)]ds \] We make the same approximations as before based on our limited covariate resolution.

While the topic of LGCPs on its own is quite interesting and deserves its own treatment, for now we examine its behavior only as an adjustment to the NHPP. In a future post I will discuss approximations to the LGCP, which are quite important as fitting the spatial correlation matrix is computationally intensive as the number of locations increases. If we want to apply the LGCP to developing optimal designs (another future topic), we will need an approximation.

For now, I proceed with fitting the model in Stan.

model_string <- log_gaussian_cox_process

write(model_string, model_path)

# Here we need to use the distance matrix

data = list(N = sites, X = grid_points$aux_x, Z = grid_points$aux_z, y = sim_data$Y[data_reps,], D = distance_mat)

model <- cmdstan_model(model_path)

lgcp_fit <- model$sample(data=data, seed=13, chains=3, iter_sampling=2000, iter_warmup=200, thin = 10)Having fit the LGCP we can examine the parameters

lgcp_fit$summary(variables=c('alpha', 'beta', 'gamma', 'delta'))# A tibble: 4 × 10

variable mean median sd mad q5 q95 rhat ess_bulk ess_tail

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 alpha -1.20 -1.19 0.746 0.800 -2.39 0.0364 1.00 636. 599.

2 beta 1.49 1.50 0.361 0.352 0.889 2.08 0.999 612. 602.

3 gamma -1.20 -1.25 0.753 0.794 -2.40 0.0336 1.00 536. 447.

4 delta 0.0336 0.0490 0.371 0.394 -0.587 0.622 1.00 642. 631.posterior <- lgcp_fit$draws()

color_scheme_set("mix-blue-pink")

p_trace <- mcmc_trace(posterior, pars = c("alpha", "beta", "gamma", "delta"),

facet_args = list(nrow = 2, labeller = label_parsed))

print(p_trace + facet_text(size = 15))

The chains don’t look perfect and it would probably be better to run the sampling algorithm for longer. But for now this is ok.

Then check the posterior intervals:

plot_title <- ggtitle(paste("Posterior distributions, with means and 90% interval"))

p_post <- mcmc_areas(posterior, prob = 0.9, point_est="mean", regex_pars = c("alpha", "beta")) + plot_title

print(p_post)

plot_title <- ggtitle(paste("Posterior distributions, with means and 90% interval"))

p_post <- mcmc_areas(posterior, prob = 0.9, point_est="mean", regex_pars = c("gamma", "delta")) + plot_title

print(p_post)

mcmc_intervals(posterior, pars=c('alpha', 'beta', 'gamma', 'delta'))

#mcmc_hist(fit$draws(), pars = c('alpha', 'beta', 'gamma', 'delta'))

plot_title <- ggtitle("Parameter posterior sample correlation")

p_post <- mcmc_pairs(posterior, pars=c('alpha', 'beta', 'gamma', 'delta'))

print(p_post)

Interestingly, the \(\alpha\) and \(\gamma\) parameters are somewhat identified. We can see that the parameters are nearly recovered, though biased slightly towards 0. Additionally, The credible intervals are much smaller than in the Poisson point process, indicating that the parameters are indentifiable, though the scatter plots still show some correlation.

Finally we take a look at the posterior predictions for \(\lambda\), \(b\), and \(\lambda\cdot b\).

generated_vars <- c('lambda_bias', 'lambda_rep', 'b_rep', 'y_rep')

lambda_thinned <- lgcp_fit$summary(variables=generated_vars[1])$mean

lambda_rep <- lgcp_fit$summary(variables=generated_vars[2])$mean

bias_rep <- lgcp_fit$summary(variables=generated_vars[3])$mean

y_ppd <- lgcp_fit$summary(variables=generated_vars[4])$mean

ppd_df <- data.frame(x=grid_points$x, y=grid_points$y, y_rep=y_ppd, lt=lambda_thinned, l=lambda_rep, b=bias_rep)

p <- ggplot(ppd_df, aes(x, y, fill=y_rep)) +

geom_tile() +

scale_fill_viridis(discrete=FALSE) +

ggtitle("PPD counts per site")

print(p)

p <- ggplot(ppd_df, aes(x, y, fill=lt)) +

geom_tile() +

scale_fill_viridis(discrete=FALSE) +

ggtitle("Thinned intensity per site")

print(p)

p <- ggplot(ppd_df, aes(x, y, fill=l)) +

geom_tile() +

scale_fill_viridis(discrete=FALSE) +

ggtitle("Intensity per site")

print(p)

p <- ggplot(ppd_df, aes(x, y, fill=b)) +

geom_tile() +

scale_fill_viridis(discrete=FALSE) +

ggtitle("Bias per site")

print(p)

This time, we get realistic estimates of \(b\) and \(\lambda\), before thinning. Inducing the correlation structure allows us to better recover the parameters, albeit at a high computational cost.

Having fit the Poisson Process and LGCP to to simulated PO data and observed the behavior of each model, we have met the goal we set out to accomplish. Hopefully this can serve as a useful resource to anyone interested the topic.